Adding Private Endpoints to resources in Azure is an excellent way to secure their connectivity by preventing access over the public internet. Taking resources offline, however, adds its own challenges, particularly when a resource's DNS changes part-way through a parallel deployment.

This article highlights the pitfalls of deploying private resources in Azure via Terraform, and how to overcome them.

This article is also my contribution to Azure Spring Clean 2023 - aimed at promoting well-managed Azure tenants.

Mission Statement

I've been brought in to help a customer with a complex Terraform deployment.

Their application infrastructure consists of an Azure Web App front-end, and multiple back-end components including Storage Accounts, Key Vaults and Cosmos DB.

Their existing Terraform is modular; however, the entire solution is deployed in one run.

The infra deployment is via GitHub Actions on a self-hosted runner, running on an Azure VM.

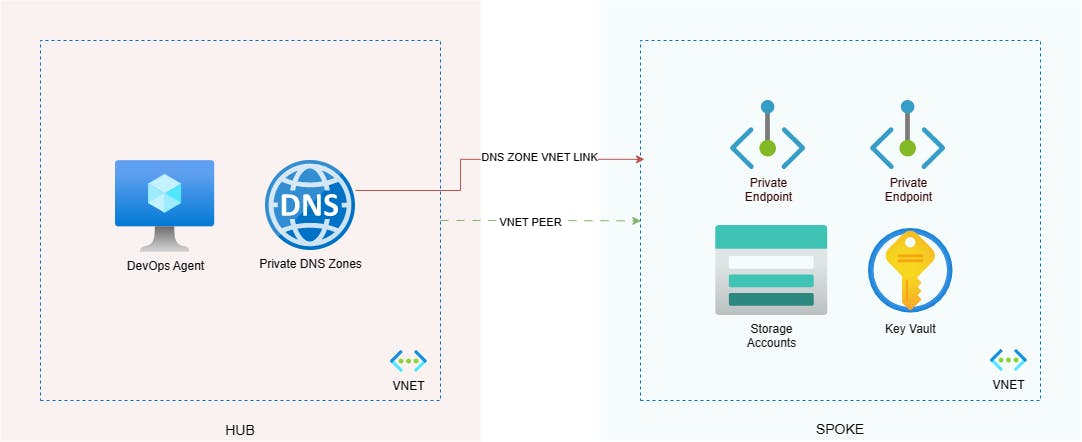

I've already worked with this customer to implement a hub and spoke network topology, where centralised resources such as the self-hosted runner and Private DNS Zones are in the hub, and the application itself is deployed as a spoke.

The app infra deployment peers the spoke VNET to the hub VNET, and links the hub's Private DNS Zones to the spoke VNET.

The infrastructure deployment is throwing a lot of very confusing errors.

My job is to solve the problem.

Replicating The Issue

The Terraform deployment is complex, but I know the errors are specific to Private Endpoints. To save time and remove complexity, I replicate the scenario with a stripped-down Terraform config, deploying a smaller number of resources.

My Terraform deployment does the following:

- Deploys the spoke VNET and a subnet called Endpoints (for the Private Endpoints).
- Peers the spoke VNET with the hub VNET (and vice versa).
- Links the hub Private DNS Zones to the spoke VNET.
- Deploys a Key Vault with a Private Endpoint, and sets the network rules to deny public access.
- Deploys a number of Storage Accounts, each with multiple blob containers, tables and queues.
  - Each Storage Account will need 3 x Private Endpoints, one for blob, one for table and one for queue.
  - The network rules for each Storage Account are set to deny public access.
  - The primary key for each Storage Account is saved as a secret within the Key Vault.

This is only a sample of what the wider app infra looks like.

You can see my Terraform for this in my GitHub repo, here.
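To give a feel for the "3 x Private Endpoints per Storage Account" requirement, here is a minimal sketch. It is not necessarily the code from the repo: it assumes a Storage Account resource called azurerm_storage_account.example already exists, and both variables are hypothetical names chosen for illustration.

```hcl
# Hypothetical sketch: variable names are illustrative assumptions.
variable "endpoints_subnet_id" {
  type = string
}

# Assumed shape: one Private DNS Zone ID per sub-resource (blob, table, queue).
variable "private_dns_zone_ids" {
  type = map(string)
}

# One Private Endpoint per storage sub-resource.
resource "azurerm_private_endpoint" "storage" {
  for_each            = toset(["blob", "table", "queue"])
  name                = "${azurerm_storage_account.example.name}-${each.key}-pe"
  location            = azurerm_storage_account.example.location
  resource_group_name = azurerm_storage_account.example.resource_group_name
  subnet_id           = var.endpoints_subnet_id

  private_service_connection {
    name                           = "${azurerm_storage_account.example.name}-${each.key}-psc"
    private_connection_resource_id = azurerm_storage_account.example.id
    subresource_names              = [each.key]
    is_manual_connection           = false
  }

  # Registers the endpoint's private IP in the matching Private DNS Zone.
  private_dns_zone_group {
    name                 = "default"
    private_dns_zone_ids = [var.private_dns_zone_ids[each.key]]
  }
}
```

Using for_each over the sub-resource names keeps the three endpoints in one resource block, so the wider module only has one thing to depend on.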

What is a Private Endpoint?

A Private Endpoint adds a network interface to a resource, providing it with a private IP address assigned from your VNET. Once applied, you can communicate with this resource exclusively via the VNET.

The alternative to Private Endpoints is Service Endpoints, where the resources are still accessible over the public internet, but their integrated firewalls restrict access to designated VNETs/subnets or public IP addresses.

Private Endpoints are more secure in this context but come with additional complexity.

Once a Private Endpoint has been applied, a resource's endpoint is no longer publicly routable.

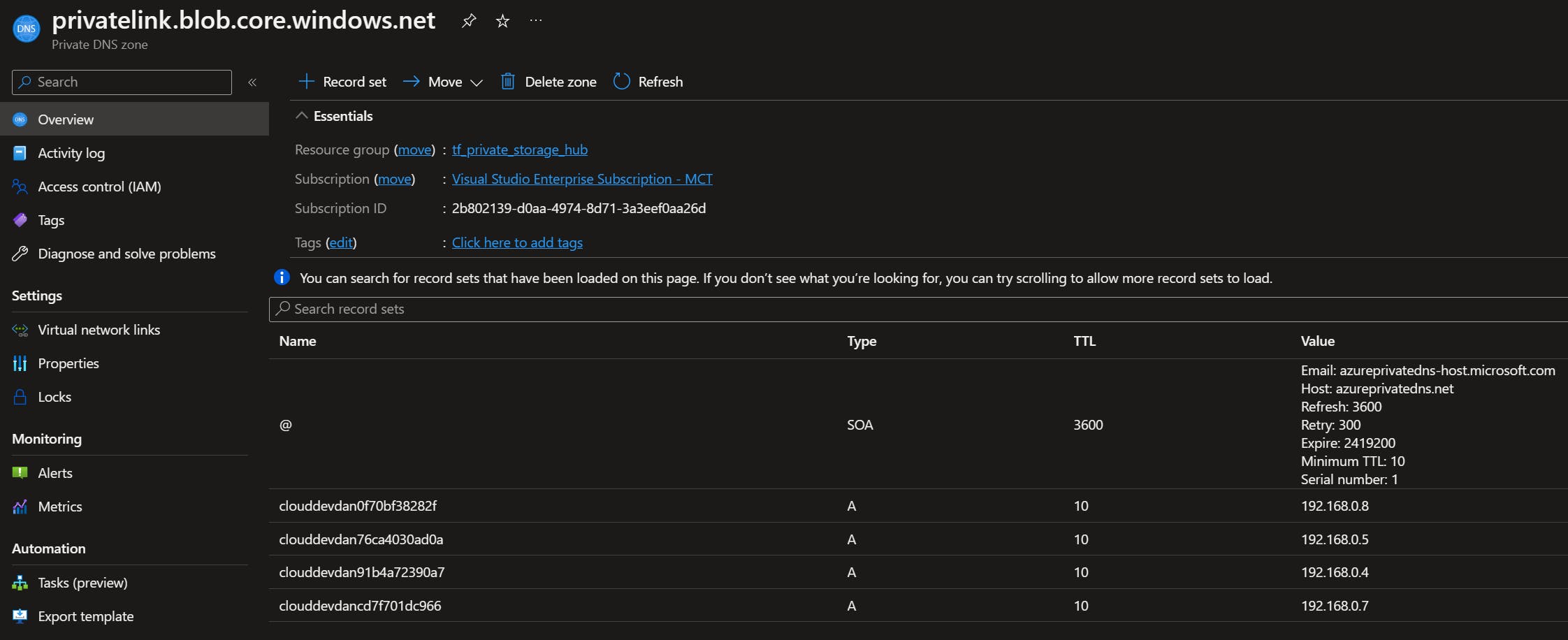

Private DNS Zones solve this issue. Linked to one or more VNETs, a Private DNS Zone holds DNS records for the private resources. When you deploy a Private Endpoint and link it to a Private DNS Zone, the resource's public DNS name is updated with a CNAME record pointing to its privatelink equivalent, which the Private DNS Zone resolves to the private IP address.

For example, mystorageaccount.blob.core.windows.net would point to mystorageaccount.privatelink.blob.core.windows.net, which in turn resolves to something like 192.168.0.8.

Any VNET linked to one or more Private DNS Zones will resolve those endpoints privately.

In the context of my solution, the Private DNS Zones need to be linked to both the hub and spoke VNETS so that resources attached to either VNET can resolve private DNS requests correctly.
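Linking the hub's zones to the spoke VNET could look something like the sketch below. This is an assumption about the shape of the config rather than a copy of it: the local map of short keys is hypothetical, and it presumes an aliased azurerm.hub provider, a var.hub_resource_group_name variable and a spoke VNET resource named azurerm_virtual_network.vnet.

```hcl
# Hypothetical sketch: the short keys (blob, table, ...) are illustrative.
locals {
  private_dns_zones = {
    blob  = "privatelink.blob.core.windows.net"
    table = "privatelink.table.core.windows.net"
    queue = "privatelink.queue.core.windows.net"
    vault = "privatelink.vaultcore.azure.net"
  }
}

# Look up the existing zones that live in the hub.
data "azurerm_private_dns_zone" "dns_zones" {
  for_each            = local.private_dns_zones
  provider            = azurerm.hub
  name                = each.value
  resource_group_name = var.hub_resource_group_name
}

# Link each hub zone to the spoke VNET so the spoke resolves privately.
resource "azurerm_private_dns_zone_virtual_network_link" "spoke" {
  for_each              = data.azurerm_private_dns_zone.dns_zones
  provider              = azurerm.hub
  name                  = "${each.key}-to-spoke"
  resource_group_name   = each.value.resource_group_name
  private_dns_zone_name = each.value.name
  virtual_network_id    = azurerm_virtual_network.vnet.id
  registration_enabled  = false
}
```

The links are created with the hub provider because the zones, and therefore the link resources, sit in the hub's resource group.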

Below is an example of a Private DNS Zone for blob.core.windows.net:

In this solution example, the Private DNS Zones are centralised in the hub, so they can be shared amongst multiple spoke environments.

Source: What is a private endpoint?

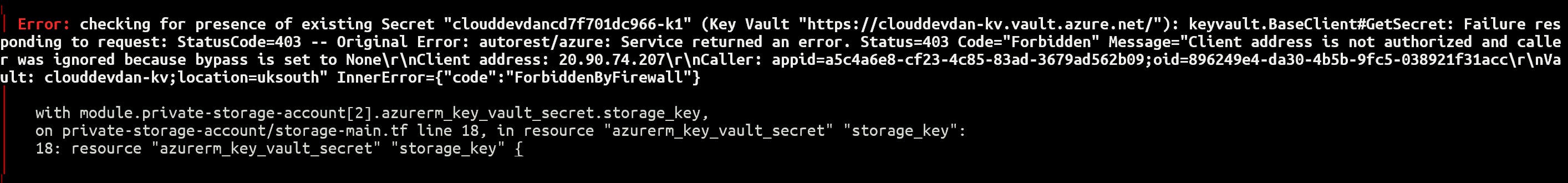

Understanding The Errors

Using my stripped-down sample, I've been able to replicate the errors my customer has been experiencing.

The one thing they all have in common is StatusCode=403 (HTTP Forbidden).

Sometimes the error is thrown when trying to save the Storage Account key as a Key Vault secret; other times it fails to create a particular blob container or storage queue, again with a 403 error. There's no consistency, but it's clearly network related.

The interesting point here is that these errors only happen on the first run. If I re-run the entire deployment, everything works.

The issue here was clearly to do with time.

Terraform (quite rightly) is trying to deploy as much as it can in parallel whilst working out its own dependency maps.

Terraform may understand that the Key Vault needs to exist before the Storage Account key can be written as a secret, but whilst it's queuing up its list of resources to deploy, their network settings are changing. What was originally publicly resolvable is now exclusively privately resolvable. The DNS has changed!
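A minimal sketch of the kind of resource that gets caught out is below. The resource and variable names are assumptions for illustration. Terraform infers its ordering purely from the references in the block, so it knows about the Storage Account and the Key Vault ID, but nothing here tells it to wait for the Key Vault's Private Endpoint or for DNS to settle.

```hcl
# Hypothetical sketch: names are illustrative, not taken from the repo.
variable "key_vault_id" {
  type = string
}

resource "azurerm_key_vault_secret" "storage_key" {
  name  = "${azurerm_storage_account.example.name}-primary-key"
  value = azurerm_storage_account.example.primary_access_key

  # Implicit dependency on the Key Vault only. The vault's Private Endpoint
  # and network rules are invisible to this resource, so the write can be
  # attempted while the vault's DNS is still changing.
  key_vault_id = var.key_vault_id
}
```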

A re-run of the same deployment does not replicate the issue as Terraform can determine the network change, but also the self-hosted runner can pick up on it too.

I have a distant memory of something like this happening in Bicep a while ago, but I can't recall the specifics. I have a suspicion the underlying issue could be down to the Azure APIs under the hood. Either that or it's simply a race condition issue, or possibly DNS caching, either on the runner or in Terraform itself.

I can't be certain of the exact root cause on this one, but I'm going to blame it on DNS...

Considering My Options

My first instinct was to split this rather large and complex deployment into multiple, smaller deployments according to resource lifecycle. For example, different Terraform deployments for the VNET, Key Vault and Storage Accounts.

Whilst this would have been a relatively simple job for my stripped-down sample, the customer's full-blown solution was far more complex and would have required significant re-work.

The issues would have been distributing the config between multiple deployments, and capturing the outputs of one deployment as input for another over a matrix of linked resources and modules.

I considered tools such as Terragrunt to centralise the config, but the customer wasn't keen.

I needed to get my hands dirty with some Terraform!

A Tale of Time

The code for my working solution can be found in my GitHub repo, here.

The first thing I did was determine the order in which resources were created, primarily within the Storage Account module.

For example, I used the depends_on meta-argument to specify that the Private Endpoint goes on only after the containers, tables and queues have been created. The resource to deny all public access to the Storage Account was set to go on last.

```hcl
resource "azurerm_storage_account_network_rules" "example" {
  storage_account_id = azurerm_storage_account.example.id
  default_action     = "Deny"
  ip_rules           = []
  bypass             = ["None"]

  depends_on = [
    azurerm_storage_container.example,
    azurerm_storage_queue.example,
    azurerm_storage_table.example,
    azurerm_private_endpoint.private_endpoint
  ]
}
```

This helped, but I was still getting some ad-hoc 403 errors. I needed to allow time for the VNETs to peer, and DNS to propagate.

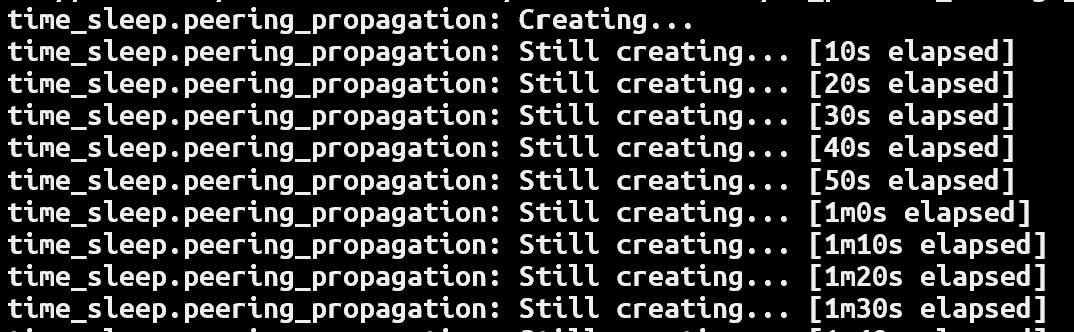

I solved this by adding some cheeky little sleep timers, using the Terraform time_sleep resource.

```hcl
resource "time_sleep" "peering_propagation" {
  create_duration = "2m"

  triggers = {
    peering_confirmation = module.vnet.peering_confirmation
  }
}
```

Within the VNET module, I set an output containing the ID produced by the peering with the hub VNET. Once the peering has completed and the ID has been produced, Terraform waits for 2 minutes.
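The output feeding that trigger could be as simple as the sketch below. The file names are hypothetical, and picking the hub-to-spoke peering is an assumption on the basis that it is the last of the two peerings to be created. The time_sleep resource comes from the hashicorp/time provider, so that needs declaring in the root module too.

```hcl
# vnet/outputs.tf (hypothetical file name)
# Expose the peering ID so the root module can trigger the sleep timer from it.
output "peering_confirmation" {
  value = azurerm_virtual_network_peering.hub-to-spoke.id
}

# Root module, e.g. versions.tf (hypothetical file name)
# Declare the provider that supplies the time_sleep resource.
terraform {
  required_providers {
    time = {
      source = "hashicorp/time"
    }
  }
}
```

Because the trigger references the module output, the timer cannot start until the peering ID is known, which is what ties the delay to the peering rather than to the start of the run.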

I did the same thing with the VNET links to the Private DNS zones in the hub, and also the creation of the Key Vault. For example:

```hcl
resource "time_sleep" "vault_endpoint_propagation" {
  create_duration = "2m"

  triggers = {
    propagation = module.private-key-vault.vault_endpoint_propagation
  }
}
```

Terraform will wait for 2 minutes once the Private Endpoint resource ID has been produced.

To determine the order in which my modules were deployed (e.g. don't run the Storage Account module until the Key Vault has a Private Endpoint applied), I used depends_on again, but this time linked to the sleep timer.

```hcl
module "private-storage-account" {
  source               = "./private-storage-account"
  count                = var.resource_count
  tags                 = local.tags
  app_name             = local.app_name
  location             = local.location
  resource_group_name  = azurerm_resource_group.rg.name
  virtual_network_name = module.vnet.spoke-vnet-name
  endpoints_subnet_id  = module.vnet.endpoints-subnet-id
  private_dns_zone_ids = data.azurerm_private_dns_zone.dns_zones
  key_vault_id         = module.private-key-vault.key_vault_id

  depends_on = [
    time_sleep.vault_endpoint_propagation
  ]
}
```

Now, the Storage Account module will not run until the Key Vault has a Private Endpoint, and two minutes have passed, allowing time for DNS propagation.

Thankfully, both my Terraform config and the customer's are modular, so any module that needs access to the Key Vault, or otherwise needs to resolve private DNS, can be set to wait until the prerequisite resources have been deployed first.

It's not pretty, but it works!

Testing The Solution

By running the fixed Terraform config and monitoring the output, I can clearly see the resources being deployed in roughly the order I set. Anything that can be done in parallel is still handled by Terraform.

The sleep timers add some delay to the total time the deployment takes to complete, but it's better than waiting for it to fail and having to re-run it every time.

Another thing to note here is that before my fix was applied, terraform destroy would also throw similar 403 errors. Following my fix, this is no longer an issue.

Top Tips

These are some tips I've picked up whilst working on this solution:

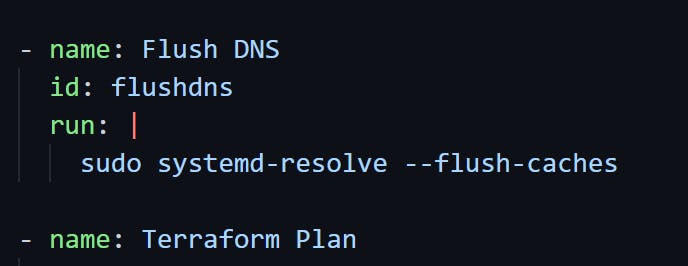

- If your GitHub Actions run on a self-hosted runner, add an action to flush DNS before running a plan or apply. If DNS changes have been made on the network (such as adding Private DNS zones), this will clear things up.
- Use command line tools (on the runner itself) to check the DNS resolution of a particular endpoint, for example: nslookup clouddevdan-kv.vault.azure.net
- If your Terraform code needs to interact with resources in a different subscription or resource group (such as with my example, performing write actions on the hub VNET by establishing a peer), you can use an additional Terraform azurerm provider and use it to specify different credentials and tenant/subscription IDs. For example:

```hcl
# provider.tf
provider "azurerm" {
  alias           = "hub"
  subscription_id = var.hub_subscription_id
  tenant_id       = var.az_tenant_id
  client_id       = var.azure_cli_hub_networking_client_id
  client_secret   = var.azure_cli_hub_networking_secret
  features {}
}

# vnet module call in main.tf (referencing the additional provider with alias "hub", set above)
module "vnet" {
  source                         = "./vnet"
  resource_group_name            = azurerm_resource_group.rg.name
  location                       = local.location
  tags                           = local.tags
  virtual_network_name           = local.virtual_network_name
  hub_resource_group_name        = data.azurerm_resource_group.hub-rg.name
  hub_virtual_network_name       = data.azurerm_virtual_network.hub-vnet.name
  hub_virtual_network_id         = data.azurerm_virtual_network.hub-vnet.id
  address_space                  = local.address_space
  endpoints_subnet_name          = local.endpoints_subnet_name
  endpoints_subnet_address_space = local.endpoints_subnet_address_space

  providers = {
    azurerm.hub = azurerm.hub
  }
}

# within the vnet module main.tf, set:
terraform {
  required_providers {
    azurerm = {
      source                = "hashicorp/azurerm"
      version               = "=3.47.0"
      configuration_aliases = [azurerm.hub]
    }
  }
}

# peering hub to spoke vnet using the "hub" provider
resource "azurerm_virtual_network_peering" "hub-to-spoke" {
  provider                     = azurerm.hub
  name                         = "hub-to-${var.virtual_network_name}"
  resource_group_name          = var.hub_resource_group_name
  virtual_network_name         = var.hub_virtual_network_name
  remote_virtual_network_id    = azurerm_virtual_network.vnet.id
  allow_virtual_network_access = true
  allow_forwarded_traffic      = true
  allow_gateway_transit        = false

  depends_on = [
    azurerm_virtual_network_peering.spoke-to-hub
  ]
}
```

Conclusion

What I've learnt from this is that you can't always apply best practices.

No matter how much you may want to, sometimes your hands are tied and you need to make the best of a tricky situation.

My solution may appear to be a tad hacky, but from a bigger picture, it's relatively simple. All I'm doing is controlling the order in which some Azure resources are deployed in Terraform, and adding in small time delays in between some of them to account for network changes.

Thanks for reading!

All my source code is available within this GitHub repo.

Source of cover photo by Icons8 Team on Unsplash