Following on from my previous article, Private Endpoints and Terraform - A Tale of Time, I want to go into more detail about my experience of working with Private Endpoints.

The use of Private Endpoints is an increasingly popular choice, and Microsoft suggest their use is considered best practice (ref). However, I feel a lot hinges on what your definition of the word 'private' really is.

In this article, I'll go over how Private Endpoints compare to Service Endpoints and provide examples of what I feel are the realities of working with them.

What is a Private Endpoint?

To avoid repeating myself, the below is an extract from my Tale of Time article (link above) that covers how I defined what a Private Endpoint is:

A Private Endpoint adds a network interface to a resource, providing it with a private IP address assigned from your VNET (Virtual Network). Once applied, you can communicate with this resource exclusively via the VNET.

The alternative to Private Endpoints is Service Endpoints, where the resources are still accessible over the public internet. However, their integrated firewalls restrict access to designated VNETS/subnets or public IP addresses.

Private Endpoints are more secure in this context but come with additional complexity.

Once a Private Endpoint is applied, a resource's endpoint is no longer publicly routable.

Private DNS Zones solve this issue. Linked to one or more VNETS, a Private DNS Zone holds DNS records for the private resources. When you deploy a Private Endpoint and link it to a Private DNS Zone, the resource's public DNS name is updated with a CNAME record pointing to its equivalent name under the privatelink zone.

For example, mystorageaccount.blob.core.windows.net would point to mystorageaccount.privatelink.blob.core.windows.net, which in turn resolves to a private IP such as 192.168.0.8.

Any VNET linked to one or more Private DNS Zones will resolve those endpoints privately.

Source: What is a private endpoint?
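To make the CNAME behaviour a bit more concrete, here is a toy model of it in Python. It is only a sketch: the two dictionaries, the resolve function and the 203.0.113.10 placeholder public IP are all my own illustrative assumptions, and real Azure DNS has more hops in the chain than this.

```python
from typing import Dict, List, Tuple

# Hypothetical records, for illustration only.
# 203.0.113.10 is a documentation-range placeholder, not a real Azure IP.
PUBLIC_DNS: Dict[str, Tuple[str, str]] = {
    "mystorageaccount.blob.core.windows.net": (
        "CNAME",
        "mystorageaccount.privatelink.blob.core.windows.net",
    ),
    "mystorageaccount.privatelink.blob.core.windows.net": ("A", "203.0.113.10"),
}

# Records held in the Private DNS Zone (only visible to linked VNETS).
PRIVATE_ZONE: Dict[str, Tuple[str, str]] = {
    "mystorageaccount.privatelink.blob.core.windows.net": ("A", "192.168.0.8"),
}


def resolve(name: str, linked_to_private_zone: bool, max_hops: int = 5) -> List[str]:
    """Follow the CNAME chain and return every name visited, ending in an IP."""
    chain = [name]
    for _ in range(max_hops):
        record = PRIVATE_ZONE.get(name) if linked_to_private_zone else None
        if record is None:
            # Fall back to public DNS when the private zone has no answer.
            record = PUBLIC_DNS.get(name)
        if record is None:
            raise LookupError(f"no record for {name}")
        record_type, value = record
        chain.append(value)
        if record_type == "A":
            return chain
        name = value
    raise LookupError("CNAME chain too long")
```

Calling resolve with linked_to_private_zone=True ends on the private IP, while the same name resolved without the link ends on the public placeholder. The hostname is identical in both cases; only the vantage point changes the answer.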

The Realities

With Service Endpoints, a PaaS resource is still publicly available. You can configure inbound traffic to come in either from designated public IPs or from within the VNET itself. The resource itself is still accessible online.

With a Private Endpoint and public access disabled, however, there is no option to allow designated public IPs. Traffic comes in from the VNET, or not at all.
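The difference between the two approaches can be sketched as a small decision function. This is my own simplified model of how I understand the integrated firewall to behave, not Azure's actual implementation; the StorageFirewall and Request classes and all of their field names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class StorageFirewall:
    # "all", "selected" or "disabled" (assumed labels for the three portal options)
    public_access: str
    allowed_public_ranges: List[str] = field(default_factory=list)
    # Subnets that have a Service Endpoint and are allowed by a network rule
    allowed_subnets: List[str] = field(default_factory=list)


@dataclass
class Request:
    via_private_endpoint: bool = False
    source_public_ip: Optional[str] = None
    source_subnet: Optional[str] = None  # set when arriving over a Service Endpoint


def is_allowed(firewall: StorageFirewall, request: Request) -> bool:
    """Return True if the (simplified) network rules would let the request in."""
    if request.via_private_endpoint:
        # Assumption: Private Endpoint traffic is not subject to the public rules.
        return True
    if firewall.public_access == "all":
        return True
    if firewall.public_access == "disabled":
        return False
    # "selected": allow listed subnets and listed public IP ranges only.
    if request.source_subnet is not None and request.source_subnet in firewall.allowed_subnets:
        return True
    if request.source_public_ip is not None:
        address = ipaddress.ip_address(request.source_public_ip)
        return any(
            address in ipaddress.ip_network(cidr)
            for cidr in firewall.allowed_public_ranges
        )
    return False
```

Note that every branch still assumes the request reached the resource in the first place; the function only models the allow or deny decision made once it arrives.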

Because of this, performance may be improved by reducing network latency as communication stays within the Microsoft network backbone.

Also note that you can apply network policies for Private Endpoints (NSGs and Route Tables on the subnet hosting them) so you can get granular control over your network traffic.

In a nutshell, using a Private Endpoint provides the most private and granular level of network access to a resource. But what does private in this context really mean, and do the cons of using a Private Endpoint outweigh the pros?

Consider the below:

Control Plane vs Data Plane

It's important to note the difference between control plane and data plane operations.

For example, let's presume we have an Azure Storage Account with a Private Endpoint applied for the blob endpoint. A control plane operation would be something like using the Azure CLI to return the account keys. A data plane operation would be something like listing blobs within a private container.

With a Private Endpoint applied for the blob endpoint, you can perform control plane operations regardless of your presence on the VNET. Yes, you need to be authenticated, but your source IP does not matter in this context.

With a data plane operation, however, you can only perform operations if you are coming at it from within the VNET.

I point this out here to highlight that adding a Private Endpoint does not make the resource totally private, only specific operations.

This is important when setting expectations.
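One way I find helpful to picture the split is to look at which hostname each type of request is sent to. The sketch below builds the two REST URLs and classifies them. The function names are my own, and the api-version value is an assumption you should check against the current Storage resource provider docs.

```python
from urllib.parse import urlsplit

# Azure Resource Manager, which fronts control plane operations.
ARM_HOST = "management.azure.com"


def list_keys_url(subscription_id: str, resource_group: str, account: str,
                  api_version: str = "2023-01-01") -> str:
    """Control plane: the ARM URL used to return the account keys."""
    return (
        f"https://{ARM_HOST}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}/providers/Microsoft.Storage"
        f"/storageAccounts/{account}/listKeys?api-version={api_version}"
    )


def list_blobs_url(account: str, container: str) -> str:
    """Data plane: the blob endpoint URL used to list blobs in a container."""
    return (
        f"https://{account}.blob.core.windows.net/{container}"
        "?restype=container&comp=list"
    )


def plane(url: str) -> str:
    """Classify a URL as control plane or data plane based on its hostname."""
    host = urlsplit(url).hostname or ""
    if host == ARM_HOST:
        return "control"
    if host.endswith(".blob.core.windows.net"):
        return "data"
    return "unknown"
```

The account keys request goes to management.azure.com, which the Private Endpoint on the blob endpoint has no influence over. Only the request to the account's own blob hostname is affected by it.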

DevOps & IaC

From a DevOps perspective (i.e. using pipelines to deploy infrastructure-as-code), you'll likely need to use self-hosted runners that have network connectivity onto the VNET on which the Private Endpointed resources are going to reside. Using a hub and spoke example, you could deploy a VM within the hub that hosts the DevOps agents, ensuring the hub VNET is peered with, and routable to, the spoke VNETS that host the Private Endpointed resources.

As per my article Private Endpoints and Terraform - A Tale of Time, the automated deployment of resources with Private Endpoints introduces further complexity when the likes of Terraform try to deploy resources in parallel but get chewed up when a resource's network config changes part way through.

Network Complexities

Imagine you have a hub and spoke network topology. In your hub, you have a VNET and shared Private DNS Zones. There are multiple spokes that each have their own VNET, including resources with Private Endpoints applied.

The hub VNET would need to peer with each spoke VNET, and every Private DNS Zone would need to be linked to the hub VNET and every single spoke VNET.
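To get a feel for how quickly this grows, here is some rough arithmetic in Python. The function name and its output keys are my own, and it assumes a single hub, with each peering configured from both sides.

```python
from typing import Dict


def hub_and_spoke_counts(spokes: int, private_dns_zones: int) -> Dict[str, int]:
    """Count the peerings and DNS zone links for one hub and N spokes."""
    vnets = spokes + 1  # every spoke plus the hub
    return {
        # One hub-to-spoke relationship per spoke
        "peerings": spokes,
        # Each relationship is configured on both the hub and the spoke side
        "peering_resources": spokes * 2,
        # Every zone is linked to the hub and to every spoke
        "dns_zone_links": private_dns_zones * vnets,
    }
```

For example, four spokes and five Private DNS Zones would come to 25 VNET links, on top of the peerings themselves.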

Best practice would dictate that there are granular permissions in place to separate the hub from the spokes. For example, the hub and each spoke could be on dedicated subscriptions with individual RBAC policies applied.

This may sound fairly straightforward, but consider how a Terraform deployment would deploy a spoke environment. The deployment would need to be on a self-hosted runner, likely in the hub to facilitate network connectivity. The Terraform deployment would also need to utilise multiple identities (e.g. Service Principals) to have the correct RBAC level access to both the hub and the spoke.

Costs

A single Private Endpoint costs approx £7.55 per month. Multiply that by how many resources you plan to add a Private Endpoint to, over how many environments you need to deploy, and the result can be expensive. This is especially true when you consider resources like Storage Accounts that have multiple endpoints (blob, table, file, queue) and therefore need a separate Private Endpoint for each endpoint used.

Furthermore, a single Private DNS Zone also costs approx £7.55.

Source: Pricing calculator
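As a back-of-the-envelope illustration, the multiplication above can be written as a small Python function. It uses the approximate figures quoted in this article, assumes the Private DNS Zone figure is also per month, and ignores any other charges. The function and parameter names are my own.

```python
from decimal import Decimal

# Approximate prices quoted in this article; check the pricing calculator
# for current figures before relying on them.
PRICE_PER_PRIVATE_ENDPOINT = Decimal("7.55")
PRICE_PER_PRIVATE_DNS_ZONE = Decimal("7.55")  # assumed to be a monthly figure


def monthly_cost(resources: int, endpoints_per_resource: int,
                 environments: int, dns_zones: int) -> Decimal:
    """Estimate the monthly cost in GBP of Private Endpoints and DNS Zones."""
    endpoints = resources * endpoints_per_resource * environments
    return (endpoints * PRICE_PER_PRIVATE_ENDPOINT
            + dns_zones * PRICE_PER_PRIVATE_DNS_ZONE)
```

With three Storage Accounts using two endpoints each (blob and file, say) across three environments, plus two shared Private DNS Zones, monthly_cost(3, 2, 3, 2) comes to £151.00 a month.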

Examples

What I want to do here is demonstrate some of the realities of working with Private Endpoints, using a Storage Account as an example.

Core Infrastructure

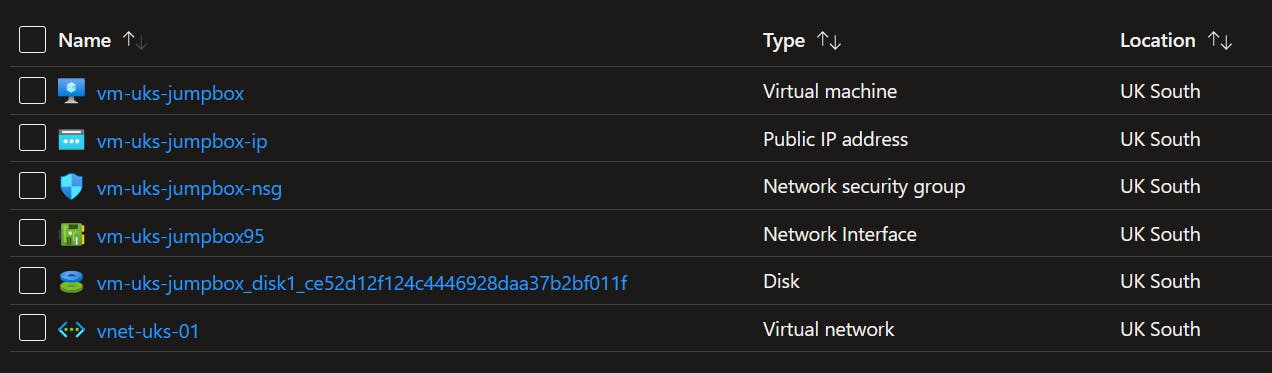

My core infra consists of a resource group called rsg-pe-testing and a VNET called vnet-uks-01. Within the VNET I've created a dedicated subnet for all Private Endpoints.

I've also created a Virtual Machine called vm-uks-jumpbox, attached to the above VNET. I'll be using this as a point of access to my VNET. I opted for an Ubuntu 20.04 VM so I don't have to faff about with an OS GUI and I can jump right into the CLI.

Storage Account

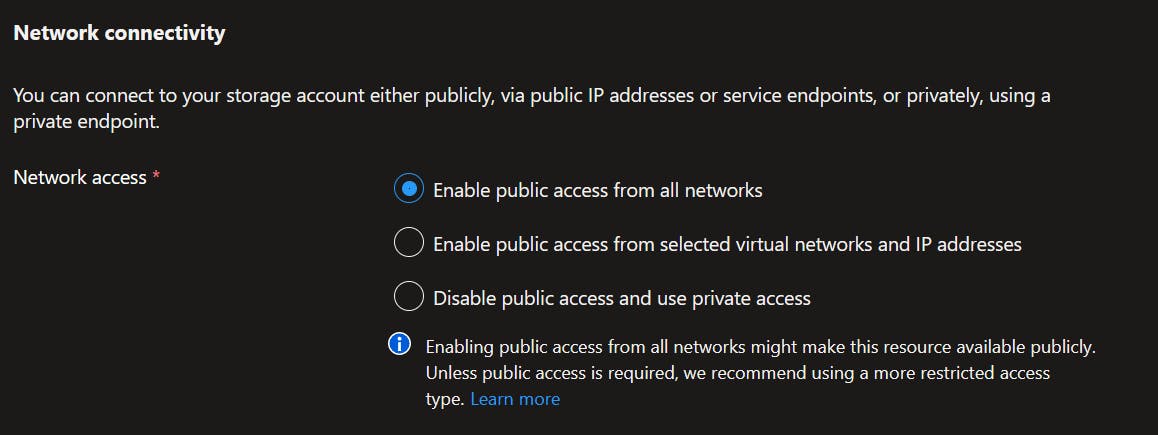

For my first example, I've created an LRS Storage Account called strukspetestingdmclo01. By default, I've left the network connectivity open to public access.

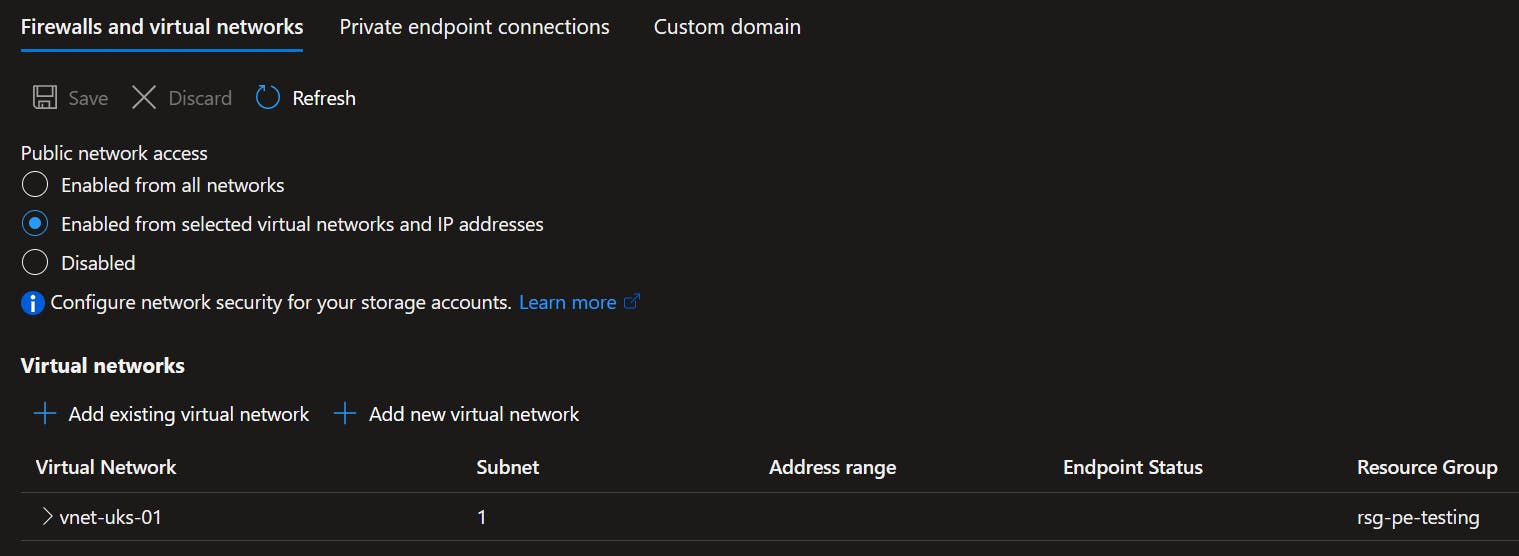

Before we move on, I want to talk about the above screen a little bit. I interpret the middle option 'selected virtual networks and IP addresses' to be related to public internet access and Service Endpoints. This is where direct internet access is still routed to the Storage Account through whitelisted public IPs or via Service Endpoints on the Microsoft backbone.

I interpret the bottom option as the one to select if I wanted to use a Private Endpoint i.e. public internet is outright blocked and the only allowed inbound traffic is from within the VNET itself.

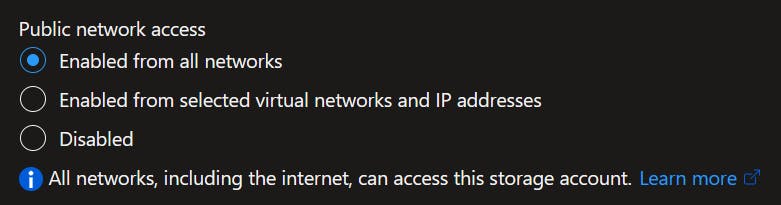

Note the text in the above screenshot is from the Azure portal when first creating the Storage Account. Once within the Storage Account page itself, under the Networking blade, that option simply says 'Disabled'.

Under the Endpoints blade of the Storage Account in the Azure portal, I can pull the different endpoints for the Storage Account, such as Blob and Table. As expected, these currently resolve to a public IP address from both within and external to my VNET.

Service Endpoints

To prove the point that the Storage Account is still exposed over the public internet, I enabled the Microsoft.Storage Service Endpoint on the subnet that the VM is running on and configured my Storage Account to only allow traffic from that specific subnet. This can be a faff to do in the portal, as if you forget to click on the Save button, your changes won't be applied!

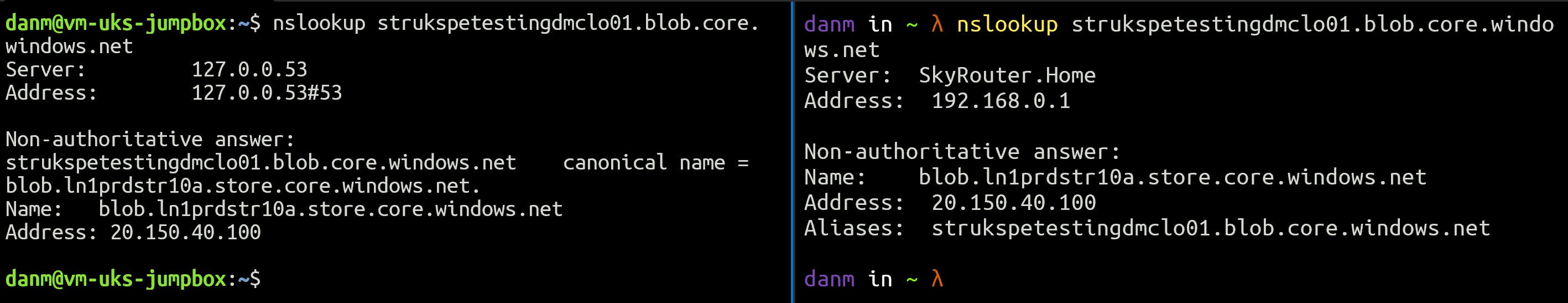

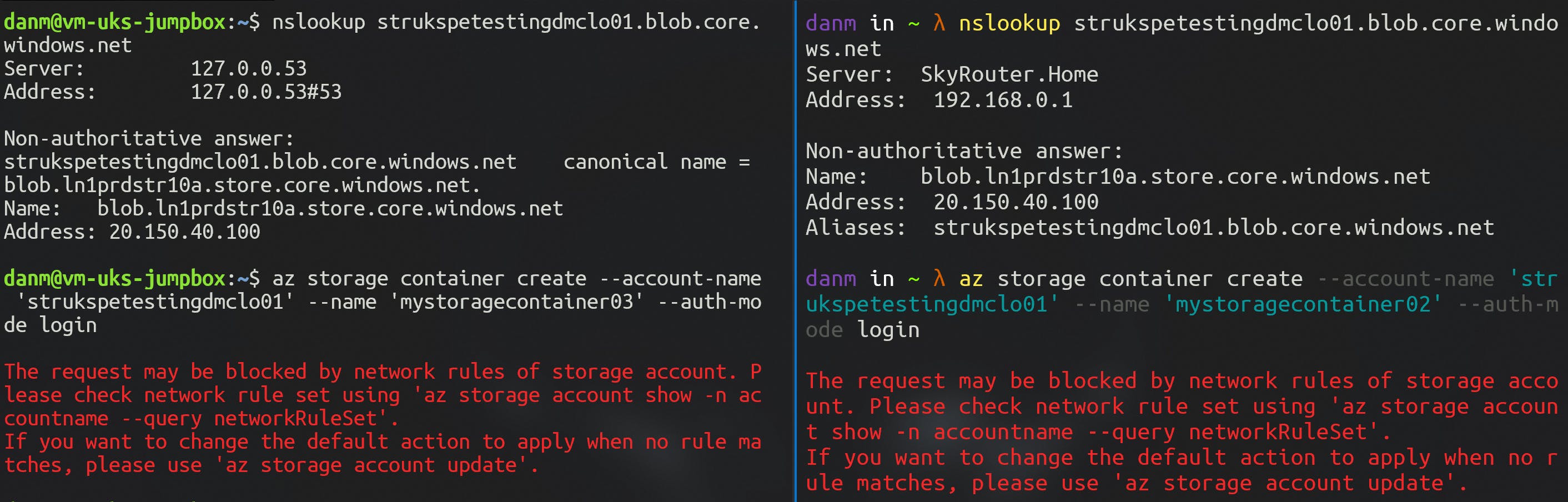

Once applied, the nslookup command both internally and externally still resolves the public IP address of the Storage Account - as expected here.

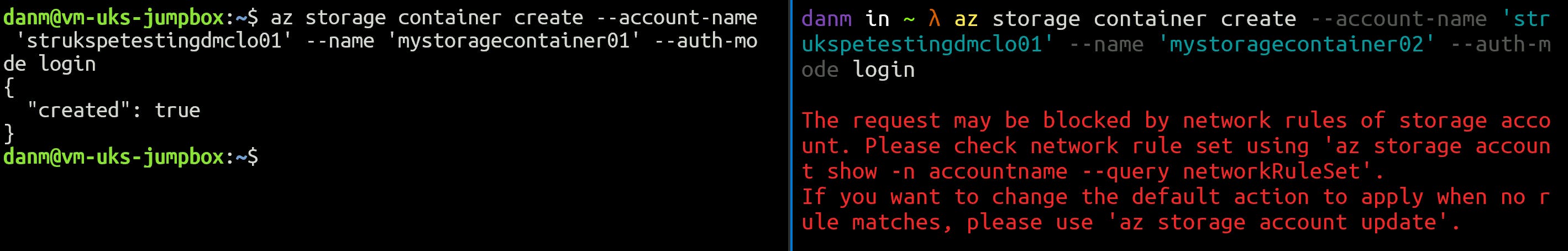

To further prove the point, I used the Azure CLI on both the Jump Box (inside the VNET - left pane) and my laptop (outside the VNET - right pane) to try and create a new blob container. As expected, it worked from within the VNET, but not from the outside. Creating a blob container is a data plane operation.

Note that the VM has a Managed Identity assigned, and both it and the user account on my laptop have the Storage Blob Data Owner RBAC role applied to the specified Storage Account.

Private Endpoints

Next, let's see what happens when I simply click the 'Disable' radio button on the Networking blade (before any Private Endpoints have been created).

What I'm expecting to see here is an outright block of any network ingress to the Storage Account.

As you can see, the nslookup command still resolved the endpoint DNS to a public IP address, but the Azure CLI command to create a new container is blocked both internally and externally due to the network rules.

This suggests to me that the Storage Account endpoint is still resolvable, but not routable. What I understand to be happening is that all traffic (regardless of source) is still hitting the Storage Account directly, but it's the integrated firewall that's blocking the requests.

Now (clearly) I'm not a network expert, far from it, but this doesn't sit right with me. If I wanted my Storage Account to be totally private and only accessible over the VNET, I'm not sure I'd be happy about this. In theory, I could run a denial-of-service attack on the Storage Account as it's still reachable online.

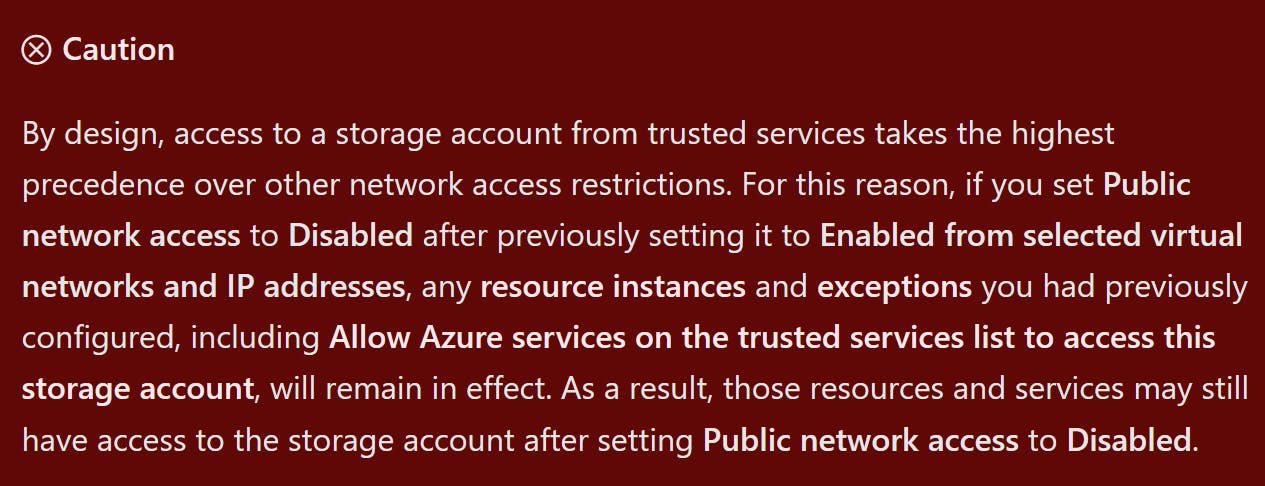

I turned to the docs to see if there was anything official to back this up, but all I came out with was the below:

I created a new Storage Account called strukspetestingdmclo02 with networking disabled during the creation wizard in the portal. However, this yielded the same results.

Let's see what adding a Private Endpoint does to this. After all, in the above test I am hitting the public endpoint, so let's flip this and run the same tests.

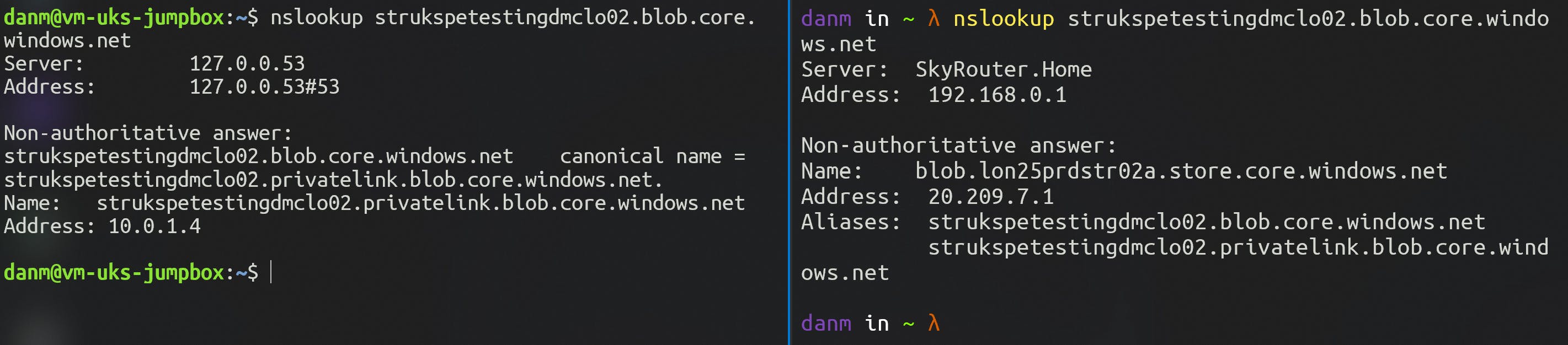

For strukspetestingdmclo02 I created a new Private Endpoint and a Private DNS Zone for privatelink.blob.core.windows.net. The Private DNS Zone is linked to my VNET, and I can see that the blob endpoint for my Storage Account has been assigned an internal IP of 10.0.1.4.

Running nslookup on the Jump Box returns the private IP as expected, but my laptop returns the public IP still.
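If you'd rather script this check than eyeball nslookup output, something like the Python below would do a similar job. It's a minimal sketch using only the standard library; the function names are my own, and it only looks at IPv4 addresses.

```python
import ipaddress
import socket
from typing import Dict


def classify(ip: str) -> str:
    """Label an IP address as 'private' or 'public'."""
    return "private" if ipaddress.ip_address(ip).is_private else "public"


def resolve_and_classify(hostname: str) -> Dict[str, str]:
    """Resolve a hostname using the machine's DNS settings and label each IPv4 result."""
    infos = socket.getaddrinfo(
        hostname, 443, family=socket.AF_INET, proto=socket.IPPROTO_TCP
    )
    addresses = sorted({info[4][0] for info in infos})
    return {ip: classify(ip) for ip in addresses}


# Example call (performs a real DNS lookup, so it is left commented out):
# resolve_and_classify("strukspetestingdmclo02.blob.core.windows.net")
```

Run from the Jump Box, I'd expect the result to be labelled private, and from my laptop public, matching the nslookup results above.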

Trying to create a new container yields the same results too. It works from the Jump Box, but my laptop is blocked by the network rules applied to the integrated firewall, not because it cannot reach it. Again, this is a data plane operation. A control plane operation such as returning the account keys still works from both devices.

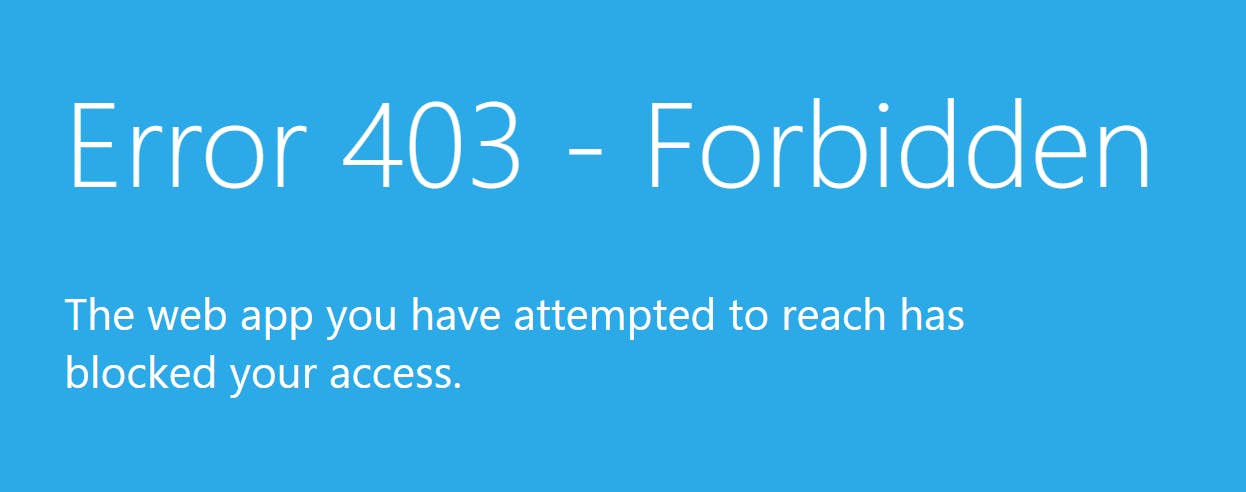

Repeating the same tests on an Azure Function App confirms my theory. With public access enabled, the default landing page is displayed from my laptop:

However, with a Private Endpoint applied, I am presented with a 403 Forbidden error. I'm still hitting the Function App directly, but its integrated firewall is blocking my requests - it's not that I outright cannot route to it.
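The distinction between 'blocked by the resource' and 'cannot route to the resource' can be checked with a few lines of Python. This is a sketch using the standard library only; the function names and the wording of the returned labels are my own.

```python
import urllib.error
import urllib.request
from typing import Optional


def interpret(status: Optional[int], error: Optional[Exception]) -> str:
    """Turn the outcome of an HTTP request into a plain-English verdict."""
    if error is not None:
        # No HTTP response at all: DNS failure, timeout, connection refused, etc.
        return "unreachable"
    if status == 403:
        # We got an answer, so the request reached something that said no.
        return "reachable but forbidden"
    return f"reachable (HTTP {status})"


def probe(url: str, timeout: float = 5.0) -> str:
    """Request a URL and report whether it was reachable, forbidden or unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return interpret(response.status, None)
    except urllib.error.HTTPError as exc:
        # 4xx and 5xx responses still prove the endpoint answered.
        return interpret(exc.code, None)
    except (urllib.error.URLError, OSError) as exc:
        return interpret(None, exc)


# Example call (makes a real HTTP request, so it is left commented out):
# probe("https://<your-function-app>.azurewebsites.net")
```

A 403 falls into the 'reachable but forbidden' bucket, which is the behaviour I saw from my laptop. If the Function App were genuinely unroutable, I'd expect a timeout or connection error instead.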

Summary

So what am I getting at here exactly?

First of all, I've demonstrated that the use of Private Endpoints, or simply setting a resource to Disable public access, doesn't actually disable public access. Not in the true sense of the word. Public traffic still reaches the resource; it's just blocked by an integrated firewall.

In my mind I presumed this would work in the same way as deleting a Public IP address from a VM would work i.e. public traffic cannot in any way shape or form hit the resource.

Also note that a Private Endpoint will only protect data plane operations, not the control plane.

To be nit-picky, a Private Endpoint is not actually private. It's just more private than the other options!

This may be an important consideration when working with security-conscious customers. Customers like these may wrongly presume that following Microsoft's best practices and adding Private Endpoints will totally render their environment offline, which isn't strictly true.

Private Endpoints are the most private and granular level of network access you can define on an Azure PaaS resource, but the term 'private' needs to have the correct expectations set.

Conclusion

In summary, Azure Private Endpoints and Service Endpoints both offer secure communication between virtual networks and Azure services, but they differ in their approach and benefits.

Private Endpoints provide a more secure and efficient communication option but introduce added costs and complexities. Also, be clear on the term 'private', as it can mean different things to different people.

Service Endpoints, on the other hand, are easier to configure and support, but do not provide as private connectivity to Azure services as Private Endpoints would.

When choosing between Private Endpoints and Service Endpoints, organisations should consider their security and performance needs, as well as the specific Azure services they plan to use. With the right configuration, both options can help organisations achieve a secure and efficient cloud environment.

Feedback

Your feedback is welcome here. Networking is out of my comfort zone, and the above article is based on my experiences and opinions. Please feel free to comment or get in touch. Thank you.