
I've used and abused containers for a while now, often throwing myself into container-based projects head-first - learning as I go. Because of this, I suspect my knowledge in this area isn't particularly well-rounded. Therefore, what I've decided to do is put myself through more formal training, starting with Docker, then moving on to Azure Container Instances, Container Apps, and AKS.

This is the first in a series of planned articles to document my learning journey. These articles will act as a record of my learning for future reference, which I'm sure future me will find useful, and maybe you will too.

I'm starting with Docker, as I feel a basic knowledge of containers will underpin the entire venture.

As an added twist for this particular article, I'll be using ChatGPT as an instructor!

Why? Well, having watched the below video, I felt inspired to give it a go and thought this could be the perfect opportunity to do so.

It should also prove to be quite fun! 😆

I'll be backing up what ChatGPT tells me with articles from Microsoft Learn and the official Docker docs pages - to be on the safe side!

What do I want to learn?

There's no point starting a new learning journey without first deciding what it is you want to learn in the first place. In the context of refreshing my Docker knowledge, I want to cover the following:

What is Docker

Building an image

Image tags

Running a container

Adding storage

Networking

Accessing a running container

Logs

Pushing/pulling to/from Docker Hub

Pushing/pulling to/from Azure Container Registry

Container security

CI/CD

Windows vs Linux containers

Docker in Azure

Software

For your reference (and my own), I'm running Docker Desktop on my Windows 11 laptop and will be interacting with the Docker CLI through PowerShell 7. At the time of writing, the Docker version is 20.10.21.

What is Docker

Dan: What is Docker?

ChatGPT:

Docker is a platform and tool that allows developers to easily create, deploy, and run applications in containers. Containers are lightweight, portable, and self-sufficient environments that allow applications to run consistently across different systems. Docker provides a simple and consistent interface for managing these containers, making it easier for developers to build, test, and deploy their applications.

Dan: Why use containers?

ChatGPT:

Containers provide a number of benefits over traditional virtualization or bare-metal deployments, including:

Portability: Containers allow applications to run consistently across different environments, such as development, testing, and production.

Isolation: Containers provide a level of isolation for the application and its dependencies, which helps to prevent conflicts and reduce the risk of system-wide issues.

Resource efficiency: Containers are lightweight and consume fewer resources than traditional virtual machines, which can result in cost savings and improved performance.

Scalability: Containers can be easily scaled up or down as needed, making it simpler to manage and deploy large-scale applications.

Flexibility: Containers can be used with a variety of different technologies, such as microservices, cloud-native applications, and more.

Improved security: Containers provide an additional layer of security by isolating applications and their dependencies, which can help to reduce the attack surface of a system.

This is awesome! Also, if you pair this with the What is Docker? page from Microsoft Learn, the two complement each other really well.

Dan: How do Docker images work?

ChatGPT:

Docker images are the building blocks of containers in Docker. They are a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

An image is created using a "Dockerfile", which is a script that contains instructions on how to build the image. The Dockerfile specifies the base image to use, any additional software to install, environment variables to set, and other configuration options. Once the Dockerfile is created, it is used to build the image using the Docker command-line interface (CLI).

Once an image is built, it can be stored in a registry, such as Docker Hub, where it can be easily shared and reused by other developers. When a container is created from an image, Docker takes the image and adds a read-write layer on top of it, allowing the container to make changes to the file system without modifying the underlying image.

Docker images are also versioned, allowing different versions of an image to be stored and managed. This makes it easy to roll back to a previous version of an image or to test new features in a separate version of an image before deploying them to production.

Again, pair that with the How Docker images work page from Microsoft Learn, and I'm getting a well-rounded understanding of the theory behind Docker.
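To picture the read-write layer ChatGPT describes, here's a toy Python model. It is illustrative only and not how Docker's storage drivers actually work. `collections.ChainMap` looks keys up through a stack of dictionaries and sends writes to the first one, which loosely mirrors a container's writable layer sitting on top of read-only image layers. The file paths and contents are made up, and deleting files is not modelled.

```python
from collections import ChainMap

# Read-only image layers, listed bottom to top (made-up contents).
base_layer = {"/etc/os-release": 'VERSION_ID="22.04"'}
app_layer = {"/usr/share/nginx/html/index.html": "<h1>Hello</h1>"}


def start_container(*image_layers):
    """Return a filesystem view with a fresh, empty writable layer on top.

    ChainMap searches its maps left to right, so the writable layer goes
    first, followed by the image layers from top-most to bottom-most.
    """
    writable = {}
    return ChainMap(writable, *reversed(image_layers))


container = start_container(base_layer, app_layer)

# Reads fall through to the image layers.
print(container["/etc/os-release"])

# Writes land only in the container's writable layer...
container["/usr/share/nginx/html/index.html"] = "<h1>Changed</h1>"

# ...so the underlying image layer is left untouched.
print(app_layer["/usr/share/nginx/html/index.html"])
```

Throwing away `container.maps[0]` is roughly what happens to a container's changes when the container is removed, which is why the image can be reused unchanged.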

Building an image

Based on what I've learnt from the above, I know that I need to create a Dockerfile in order to build an image from which I can spin up a container. Let's see what ChatGPT gives me:

Dan: Make me a simple web page running from a Dockerfile

ChatGPT:

Sure, here is an example of a simple Dockerfile for a web page using NGINX web server:

```dockerfile
# Use the official NGINX image as the base image
FROM nginx:latest

# Copy the web page to the container
COPY index.html /usr/share/nginx/html

# Expose the port that the web server will run on
EXPOSE 80
```

This Dockerfile starts by using the official NGINX image as the base image. Then it copies the index.html file to the /usr/share/nginx/html directory in the container. The EXPOSE command makes the port 80 available to the host.

This index.html file can be a simple html file that contains the content of the web page you want to run.

You can then use the docker build command to build an image from this Dockerfile:

docker build -t my-web-page-image .

This will create an image named my-web-page-image. You can then use the docker run command to start a container from this image:

docker run -p 80:80 my-web-page-image

This will start the NGINX web server, and you will be able to access the web page by visiting http://localhost in your web browser.

Please note that the above example is a very basic one, and you may want to customize the Dockerfile and the web page according to your needs, such as adding more files to the container, configuring the web server, and running custom commands.

How cool is that!

Ok, this example won't quite work as it's not told us to create an index.html page, but I can do that manually. Doing so allows the image to be built, and by following the instructions to run the container, I get:

Amazing!
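Since ChatGPT's example assumes an index.html already exists, here's a small Python sketch that writes both files into a build context folder so that `docker build` has everything it needs. The folder name and page content are placeholders of my own. One thing worth knowing: according to the Dockerfile reference, EXPOSE only documents the port; it's the `-p` flag on `docker run` that actually publishes it to the host.

```python
from pathlib import Path

# The Dockerfile from ChatGPT's answer, unchanged apart from the comments.
DOCKERFILE = """\
# Use the official NGINX image as the base image
FROM nginx:latest
# Copy the web page to the container
COPY index.html /usr/share/nginx/html
# Document the port the web server listens on (publishing needs docker run -p)
EXPOSE 80
"""

# Placeholder page content.
INDEX_HTML = """\
<!DOCTYPE html>
<html>
  <head><title>My web page</title></head>
  <body><h1>Hello from a container!</h1></body>
</html>
"""


def write_build_context(folder: str) -> Path:
    """Create the build context folder containing a Dockerfile and index.html."""
    context = Path(folder)
    context.mkdir(parents=True, exist_ok=True)
    (context / "Dockerfile").write_text(DOCKERFILE)
    (context / "index.html").write_text(INDEX_HTML)
    return context
```

Calling `write_build_context("my-web-page")` and then running `docker build -t my-web-page-image my-web-page` should produce the same image as the manual approach.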

Before I move on, I wanted to cover off the ChatGPT reference to the Dockerfile base image, as this seems to go against the What is a base image? section of the Microsoft Learn article I was reading.

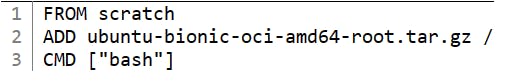

Reading up on this via Microsoft Learn, I found that images can be either base images or parent images. The base image, called scratch, is effectively an empty image that you can build upon. A parent image is an existing image that is itself built upon a base image.

If I were to specify FROM ubuntu:18.04 in a Dockerfile, it would be using Ubuntu as a parent image (with the tag of :18.04 - more on that in a bit). As we've not specified where exactly we are pulling the Ubuntu image from, it defaults to the official Ubuntu repository on Docker Hub. What's interesting is that if I inspect the 18.04 release, I can see that this parent image uses the base called scratch.

Cool! I didn't know that.

At this point, I can build a very basic image and run it, all thanks to ChatGPT and a little common sense.

Image tags

I touched on image tags a moment ago, so let's see what ChatGPT has to say about them.

Dan: Tell me about Docker image tags

ChatGPT:

Docker images are identified by a combination of their repository name and a tag. The tag is a string that is used to differentiate different versions of an image.

For example, the image nginx:latest refers to the latest version of the Nginx image in the official Nginx repository. Similarly, nginx:1.19.5 refers to version 1.19.5 of the Nginx image.

By default, when you use the docker pull command to download an image, it will download the latest version of the image, which is tagged with the latest tag. You can also specify a specific tag to download using the docker pull command.

When you build a new image using the docker build command, you can use the -t option to specify a repository name and a tag for the image.

For example, the following command will build an image from the current directory and tag it as my-image:v1.0:

docker build -t my-image:v1.0 .

It's common to use semantic versioning for tagging images, for example my-image:1.0.0 or my-image:1.0.0-beta

You can also use the docker tag command to add or remove tags from an existing image. For example, the following command will create a new tag my-image:v2.0 that refers to the same image as my-image:v1.0:

docker tag my-image:v1.0 my-image:v2.0

Tagging images is useful for many reasons, including:

Versioning your images

Keeping track of the different stages of your image development

Allowing users to choose which version of the image they want to use

You can also use multiple tags to different images, for example, you can tag an image as my-image:v1.0, my-image:v1.0-beta, my-image:latest and so on.
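To see how a full image name breaks down into registry, repository and tag, here's a rough Python sketch. It's a simplified approximation of Docker's normalisation rules as I understand them, not Docker's actual code: if the first path component looks like a host (contains a `.` or `:`, or is `localhost`) it's treated as a registry, otherwise Docker Hub is assumed; single-name official images live under `library/`; and a missing tag becomes `latest`. Digests (`@sha256:...`) are not handled.

```python
def parse_image_ref(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digest references are not handled.
    """
    first, _, rest = ref.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # The registry has been split off above, so a port such as
    # localhost:5000 can't be mistaken for a tag here.
    name, sep, tag = remainder.rpartition(":")
    if not sep or "/" in tag:
        name, tag = remainder, "latest"  # no tag given: default to latest

    # Official images on Docker Hub sit under the "library" namespace.
    if registry == "docker.io" and "/" not in name:
        name = f"library/{name}"
    return registry, name, tag
```

For example, `parse_image_ref("ubuntu")` gives `("docker.io", "library/ubuntu", "latest")`, which is why `docker pull ubuntu` and `docker pull ubuntu:latest` fetch the same thing.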

Interesting... Microsoft Learn tells me that:

An image is labeled with the latest tag if you don't specify a tag.

ChatGPT takes that further by stating that the latest tag can also be used (when pulling a remote image, for example) to take the most recent build. I've always been a bit fuzzy on the exact use of the latest tag with Docker, so this makes sense.

To prove this, I ran the below:

docker run -it ubuntu:latest

This pulls the latest Ubuntu image from Docker Hub, and the -it flags automatically connect me to the bash shell once the container is running.

cat /etc/os-release

Running the above yields VERSION_ID="22.04", which, based on the below (from Docker Hub), is tagged as the latest build.

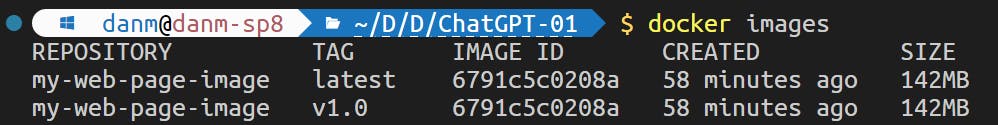

However, if I tag the image we created earlier, by running:

docker tag my-web-page-image my-web-page-image:v1.0

I'm left with two entries in my image list, one tagged latest and one tagged v1.0, both pointing at the same image ID:

This will be because the original image was not tagged and so defaulted to latest.

Therefore, if I ever want to use the latest tag, I need to be really careful with it. You can apply more than one tag to an image, so I could keep things organised, but this feels messy. Let's see what ChatGPT thinks.

Dan: Is using the latest tag on a docker image best practice?

ChatGPT:

Using the "latest" tag on a Docker image is a common practice, but it may not always be the best practice.

The "latest" tag is often used to refer to the most recent version of an image. This can be useful for development and testing, as it allows you to easily pull the newest version of an image without having to keep track of specific version numbers.

However, in production environments, it is generally considered better practice to use specific version tags rather than the "latest" tag. This is because using specific version tags allows you to have more control over which version of an image is being used, and prevents unexpected changes from being deployed.

Additionally, when you use versioned tags, it's easier to rollback to previous versions in case you need to.

It's important to keep in mind that the "latest" tag is not immutable and it will change with each new build, so it's best to use version tags that are specific and fixed, such as "v1.0" or "v2.1". This way you can ensure that you are deploying exactly the version you have tested and validated, and avoid any unexpected behavior.
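A toy model helps me picture why latest is risky: a tag is just a movable name pointing at an image ID, so re-tagging a new build moves latest while a pinned tag keeps pointing at the build I tested. This is an illustration rather than Docker's implementation, the image IDs are made up, and pinned tags are only stable by convention (a registry will generally let you overwrite them too).

```python
class TagStore:
    """Toy model of tags as named pointers to image IDs (not Docker's code)."""

    def __init__(self):
        self.tags: dict[str, str] = {}

    def tag(self, ref: str, image_id: str) -> None:
        # Like `docker tag`: point a name at an image, replacing any previous target.
        self.tags[ref] = image_id

    def resolve(self, ref: str) -> str:
        # A reference without a tag implicitly means ":latest".
        if ":" not in ref.rsplit("/", 1)[-1]:
            ref += ":latest"
        return self.tags[ref]


store = TagStore()
store.tag("my-image:v1.0", "sha-aaa")
store.tag("my-image:latest", "sha-aaa")  # two names, one image

# A new build is tagged v2.0 and also re-tagged as latest.
store.tag("my-image:v2.0", "sha-bbb")
store.tag("my-image:latest", "sha-bbb")

print(store.resolve("my-image"))       # latest has moved to the new build
print(store.resolve("my-image:v1.0"))  # the pinned tag still points at the old one
```

Anything deployed as `my-image` would silently pick up the new build on its next pull, whereas `my-image:v1.0` would not.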

Running a container

I've already had a go at running a container interactively (using the -it flag) and in the foreground while it served a simple web page, but I know there is more to it than that.

Dan: How can I run Docker containers?

ChatGPT:

You can use the docker run command to run a new container from an image. The syntax for the docker run command is as follows:

docker run -d nginx

The -d option specifies that the container should run in detached mode, which means it will run in the background.

You can also use the -p option to map a container's port to a host port, for example, the following command maps the container's port 80 to the host's port 8080:

docker run -d -p 8080:80 nginx

You can also use the -v option to mount a host directory as a volume in the container. This can be useful to share data between the host and the container.

For example, the following command mounts the host directory /data as a volume in the container at the path /data:

docker run -d -v /data:/data nginx

You can also use -e option to pass environment variable to the container

Also, you can use the docker container run command which is an alias for docker run, the syntax is the same.

If you want to run a command on the container instead of running the default command that's defined in the image, you can specify the command after the image name, for example:

docker run -it ubuntu bash

This command will run an interactive shell in an ubuntu container, this way you can see the container output, and interact with it.

Once you're done with a container, you can stop it with the docker stop command, and you can remove it with the docker rm command.

Oh, nice! This response covered (at a very high level) several of the topics on my list, such as mounting a volume and mapping a port. I now also know how to pass in environment variables and run a detached container.
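To keep these flags straight, here's a Python sketch that assembles the `docker run` options from the answer above into an argument list. The `-d`, `--name`, `-p`, `-v` and `-e` flags are standard Docker CLI options; the `build_run_args` helper itself is hypothetical. It only builds the command, so nothing runs until you hand the list to something like `subprocess.run`.

```python
def build_run_args(image, *, detach=False, name=None, ports=None,
                   volumes=None, env=None, command=None):
    """Build the argument list for `docker run` (hypothetical helper)."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")  # run in the background
    if name:
        args += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]  # host:container
    for source, target in (volumes or {}).items():
        args += ["-v", f"{source}:{target}"]  # host path or volume : container path
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]
    args.append(image)
    # Anything after the image name replaces the image's default command.
    args += list(command or [])
    return args
```

With Docker installed, `subprocess.run(build_run_args("nginx", detach=True, ports={8080: 80}), check=True)` should be equivalent to typing `docker run -d -p 8080:80 nginx`.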

Adding storage

Dan: Tell me about Docker storage

ChatGPT:

Docker storage refers to how images and containers are stored in a Docker environment. The main components of Docker storage are:

Docker images: These are snapshots of containers that can be stored and reused.

Docker volumes: Volumes are separate directories within a Docker host that can be used to store persistent data separate from the container's filesystem.

Docker bind mounts: These are used to mount a host directory into a container.

Docker tmpfs mounts: These are used to mount a tmpfs filesystem into a container, which is stored in RAM.

By default, Docker uses a storage driver to manage the storage of images and containers. The most commonly used storage driver is the overlay2 driver, which uses a union filesystem to combine multiple layers of an image into a single filesystem. Other storage drivers include devicemapper and btrfs.

It is possible to configure the Docker storage driver and other storage-related options in the Docker daemon configuration file, and you can also use the Docker CLI to manage volumes, bind mounts, and tmpfs mounts.

I turned to Microsoft Learn for a bit more detail on this one. Here are my main takeaways:

Container storage is temporary.

A volume is stored on the host filesystem at a specific folder location.

A bind mount is conceptually the same as a volume, however, instead of using a specific folder, you can mount any file or folder on the host. This is best used when a container and the host need to share the same files.

Bind mount data is expected to be updated by the host. Volume data isn't meant to be updated by the host.
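The `-v source:target` syntax covers both volumes and bind mounts, which confused me at first. As I understand it, Docker treats the source as a bind mount when it's a host path and as a named volume when it's a plain name. Here's a rough Python sketch of that distinction. The volume-name pattern is my understanding of Docker's rule, and the sketch assumes Linux-style absolute paths: it doesn't handle Windows drive letters (e.g. `C:\data`), relative paths, or anonymous volumes.

```python
import re

# Pattern for local volume names (my understanding of Docker's rule).
VOLUME_NAME = re.compile(r"^[a-zA-Z0-9][a-zA-Z0-9_.-]+$")


def classify_mount(spec: str) -> dict:
    """Classify a `-v source:target[:options]` value (Linux paths only)."""
    parts = spec.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"expected source:target[:options], got {spec!r}")
    source, target = parts[0], parts[1]
    options = parts[2] if len(parts) == 3 else ""
    if source.startswith("/"):
        kind = "bind"    # host path: the host owns and can update the files
    elif VOLUME_NAME.match(source):
        kind = "volume"  # named volume: storage managed by Docker
    else:
        raise ValueError(f"unrecognised source {source!r}")
    return {
        "type": kind,
        "source": source,
        "target": target,
        "read_only": "ro" in options.split(","),
    }
```

So `docker run -d -v /data:/data nginx` from the earlier answer is actually a bind mount, whereas something like `-v dbdata:/var/lib/mysql` (a made-up volume name) would create or reuse a named volume.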

Networking

Dan: Tell me about Docker networking

ChatGPT:

Docker networking allows containers to communicate with each other and with the host system. It uses a software-defined network that is isolated from the host network. The default network mode is "bridge," which provides a network namespace for containers, with a virtual Ethernet bridge connecting containers to each other and to the host network. You can also use other network modes like "host" or "none." Docker also provides options for customizing networks, such as adding IP addresses, hostnames, and network-level routing.

A bit brief again! Let's turn to Microsoft Learn for a bit more detail on this one. Here are my main takeaways:

Docker provides three pre-configured network configurations: Bridge, Host, and None.

The bridge network is the default. This network is an internal, private network used by the container, and isolates the container network from the Docker host.

The host network enables you to run the container on the host network directly.

In our example above:

docker run -d -p 8080:80 nginx

Port 8080 is the host port, which is mapped to port 80 on the container via the bridge network. If we used the host network, we could just use port 80 as standard without the need for mapping.

Dan: Can Docker containers talk to each other?

ChatGPT:

Yes, Docker containers can communicate with each other. By default, containers are connected to a shared network namespace and can communicate with each other using their IP addresses. In order to allow communication between containers, you can either connect them to the same network or expose the necessary ports to the host network. Additionally, you can also use Docker's network service discovery feature to automatically discover and connect containers using their service names.

Dan: How do you expose ports on Docker containers?

ChatGPT:

To expose ports on a Docker container, you can use the "--publish" or "-p" option while running the container. The syntax is:

docker run -p host_port:container_port image_name

Where "host_port" is the port number on the host system that will be mapped to the "container_port" on the container. For example:

docker run -p 8080:80 nginx

This will run an nginx container and map port 80 inside the container to port 8080 on the host system, allowing incoming traffic on host_port 8080 to be sent to the container_port 80.
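The `-p` value can take a few more shapes than `host_port:container_port`: an optional host IP in front, an optional `/udp` or `/tcp` suffix, or just a container port, in which case Docker picks a free host port. Here's a simplified Python sketch of how those pieces split apart. It's my own illustration, and port ranges and IPv6 addresses are not handled.

```python
def parse_publish(spec: str) -> dict:
    """Parse a `-p` value such as "8080:80", "127.0.0.1:8080:80" or "53:53/udp".

    Simplified sketch: port ranges and IPv6 addresses are not handled.
    """
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    host_ip, host_port = "", ""
    if len(parts) == 1:
        container_port = parts[0]
    elif len(parts) == 2:
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    return {
        "host_ip": host_ip or "0.0.0.0",  # no IP given: listen on all interfaces
        "host_port": int(host_port) if host_port else None,  # None: Docker picks one
        "container_port": int(container_port),
        "protocol": protocol or "tcp",
    }
```

The `127.0.0.1:8080:80` form is worth remembering, as it keeps the published port reachable only from the host itself.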

Dan: Do Docker containers get an IP address?

ChatGPT:

Yes, Docker containers are assigned an IP address when they are connected to a network. By default, when you run a container, it is attached to a virtual network named "bridge", and each container is assigned a unique IP address within this network. The IP address is used to communicate with other containers and the host system. The IP address can be discovered using the "docker inspect" command, or by inspecting the network settings of the container from within the container itself.
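`docker inspect` prints a JSON array, and the addresses live under `NetworkSettings.Networks`, keyed by network name. The CLI can pull them out directly with a Go template, for example `docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' <container>`. If you'd rather process the JSON yourself, a Python sketch might look like the following. The sample is a heavily trimmed, made-up document containing only the fields the function reads.

```python
import json
import subprocess


def container_ips(inspect_output: str) -> dict[str, str]:
    """Map network name -> IP address from `docker inspect` JSON output."""
    containers = json.loads(inspect_output)  # docker inspect returns a list
    networks = containers[0]["NetworkSettings"]["Networks"]
    return {name: net["IPAddress"] for name, net in networks.items()}


def inspect_container(name: str) -> str:
    """Run `docker inspect` for one container (requires Docker)."""
    result = subprocess.run(["docker", "inspect", name],
                            capture_output=True, text=True, check=True)
    return result.stdout


# Trimmed, made-up example of the relevant part of the output.
SAMPLE = """
[{"Name": "/web",
  "NetworkSettings": {"Networks": {"bridge": {"IPAddress": "172.17.0.2"}}}}]
"""

print(container_ips(SAMPLE))  # {'bridge': '172.17.0.2'}
```

Against a real container, `container_ips(inspect_container("web"))` would do the same thing, assuming a container named `web` exists.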

In addition to this, I've read that you can connect Docker containers to an Azure virtual network using a plugin called Azure CNI.

Dan: Tell me about the Azure CNI plugin for Docker?

ChatGPT:

The Azure Container Networking Interface (CNI) plugin for Docker is a network plugin for Docker that provides network connectivity for containers running on Azure Container Instances. The Azure CNI plugin integrates with Azure virtual network infrastructure to provide a highly available, scalable, and secure network for containers. With the Azure CNI plugin, you can create isolated, private networks for your containers, and assign them IP addresses from your virtual network's address space. You can also configure custom DNS settings and network security policies, such as network security groups, to control access to your containers. The Azure CNI plugin is designed to work with the Docker CLI and is compatible with standard Docker networking commands, making it easy to use with existing Docker workflows.

I backed this up with the below articles:

From what I've read, the main benefit of the Azure CNI plugin is that it gives a container a specific internal IP address, assigned from an Azure virtual network. This allows it to communicate with other resources on the same (or a peered) network.

Accessing a running container

Dan: How do I access a running Docker container?

ChatGPT:

There are several ways to access a running Docker container:

- Executing a command in an existing container: You can use the "docker exec" command to run a command in an existing container. For example:

docker exec -it container_name command

- Logging into a container: If the container is running a Linux operating system, you can use the "docker exec" command to open a shell in the container. For example:

docker exec -it container_name bash

- Connecting to a container's network: If the container exposes a network service, such as a web server, you can access it by connecting to its IP address or hostname. The IP address and port can be discovered using the "docker inspect" command.

- Mapping container ports to host ports: You can use the "--publish" or "-p" option while running the container to map a container port to a host port. For example:

docker run -p host_port:container_port image_name

This makes the container's network services accessible on the host system at the specified host port.

We've already seen in earlier examples how to access a container on an open port, and interactively using the -it flag. The exec command is new to me, although I'm not sure what the use-case is for it. If you're writing a Dockerfile, you'd be better off defining the command there, so perhaps it's more suited to one-off, CLI-based actions.
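One use-case that fits "one-off, CLI-based actions" is scripting a quick check against a container that's already running, such as the os-release lookup I did earlier, without attaching a shell. Here's a Python sketch, assuming a running Linux container; the container name is a placeholder. `-it` isn't needed here because nothing interactive is happening.

```python
import shlex
import subprocess


def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines from /etc/os-release, removing shell-style quotes."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # os-release values use shell quoting, so shlex can unquote them.
        info[key] = shlex.split(value)[0] if value else ""
    return info


def container_os_version(container: str) -> str:
    """Run `cat /etc/os-release` inside a running container via docker exec."""
    result = subprocess.run(
        ["docker", "exec", container, "cat", "/etc/os-release"],
        capture_output=True, text=True, check=True,
    )
    return parse_os_release(result.stdout).get("VERSION_ID", "unknown")
```

For the Ubuntu container from earlier, `container_os_version("<container name>")` should return `22.04`, matching what I saw interactively.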

Logs

We've seen earlier how to run a detached container, and most recently, how to run a one-off command on it. So how do we see what's going on under the hood?

Dan: How do I get logs from a Docker container?

ChatGPT:

To get logs from a detached Docker container, you can use the docker logs command followed by the container name or container ID.

For example, if the container name is "mycontainer", you would run:

docker logs mycontainer

You can also use the container ID instead of the name. If you're not sure of the container name or ID, you can use the docker ps command to list all of your running containers and their associated names and IDs.

If you want to see logs in real-time, you can use -f (follow) option in the logs command

docker logs -f mycontainer

It's also possible to configure the container to write the logs to a file or a remote log service using tools such as syslog-ng, rsyslog, or fluentd. This way you can have a centralized logging solution, which can be useful when you have multiple containers running or when you want to keep the logs for a longer period of time.
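If I wanted to react to log output from a script rather than watch it, the output of `docker logs -f` can be read line by line. Here's a Python sketch; `--tail` is a real `docker logs` option that limits how much history is replayed first, while the helper names and the "error" filter in the usage note are my own placeholders.

```python
import subprocess
from typing import Iterator


def stream_lines(args: list[str]) -> Iterator[str]:
    """Yield output lines from a long-running command as they arrive."""
    # docker logs sends the container's stderr to its own stderr, so merge both.
    proc = subprocess.Popen(args, stdout=subprocess.PIPE,
                            stderr=subprocess.STDOUT, text=True)
    try:
        for line in proc.stdout:
            yield line.rstrip("\n")
    finally:
        # `-f` never ends by itself, so stop the command if the caller stops reading.
        if proc.poll() is None:
            proc.terminate()
        proc.stdout.close()
        proc.wait()


def follow_logs(container: str, tail: int = 10) -> Iterator[str]:
    """Follow a container's logs, starting with the last `tail` lines."""
    return stream_lines(["docker", "logs", "-f", "--tail", str(tail), container])
```

Usage, assuming Docker and a running container called mycontainer: `for line in follow_logs("mycontainer"): print(line) if "error" in line.lower() else None`, or more readably a plain loop with an `if` inside.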

Docker Hub

Dan: How do I push an image to Docker Hub?

ChatGPT:

To push an image to Docker Hub, you need to follow these steps:

Log in to Docker Hub:

docker login

Tag the image with your Docker Hub username and repository name:

docker tag <image_name> <docker_hub_username>/<repository_name>:<tag>

Push the image to Docker Hub:

docker push <docker_hub_username>/<repository_name>:<tag>

It's important to note here that if you are using Docker Hub, you should ideally have enabled two-factor authentication. If you have, then in order to log in via the CLI command docker login, you'll need to create an access token and use that in place of your regular password. You can set all that up in your Docker Hub account settings.
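Rather than pasting the access token at the prompt, `docker login` can read it from standard input via `--password-stdin`, which the Docker docs recommend so the secret doesn't end up in shell history or logs. Here's a Python sketch, assuming the username and token are held in environment variables I've named `DOCKERHUB_USERNAME` and `DOCKERHUB_TOKEN` (my own naming; Docker doesn't read these itself).

```python
import os
import subprocess
from typing import Optional


def docker_login_args(username: str, registry: Optional[str] = None) -> list[str]:
    """Arguments for a non-interactive `docker login` that reads the secret from stdin."""
    args = ["docker", "login", "--username", username, "--password-stdin"]
    if registry:  # omit the registry to log in to Docker Hub
        args.append(registry)
    return args


def login_with_token() -> None:
    """Log in to Docker Hub using a token from (hypothetical) environment variables."""
    username = os.environ["DOCKERHUB_USERNAME"]
    token = os.environ["DOCKERHUB_TOKEN"]
    # The token is passed on stdin rather than as a command-line argument.
    subprocess.run(docker_login_args(username), input=token, text=True, check=True)
```

The optional registry argument means the same helper could be pointed at another registry, such as an Azure Container Registry login server.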

Dan: How do I pull an image from Docker Hub?

ChatGPT:

To pull an image from Docker Hub, you can use the following command:

docker pull <docker_hub_username>/<repository_name>:<tag>

Replace <docker_hub_username>/<repository_name>:<tag> with the appropriate image name, username, repository name and tag.

If you are pulling from a private Docker Hub repository, you will need to run docker login before running the docker pull command. The above also highlights the importance of tags, as mentioned earlier.

Azure Container Registry

Dan: What is the difference between Docker Hub and Azure Container Registry?

ChatGPT:

Docker Hub and Azure Container Registry (ACR) are both container registry services, but they have some differences:

Hosting: Docker Hub is a public registry hosted by Docker, Inc. ACR is a private registry hosted by Microsoft in the Azure cloud.

Accessibility: Docker Hub is publicly accessible, while ACR is private and requires an Azure subscription.

Cost: Docker Hub has a free plan with limited features and storage, while ACR charges based on the number of storage transactions and data transferred.

Security: Docker Hub has basic security features, while ACR provides advanced security features such as Azure Active Directory authentication, Azure Policy for policy enforcement, and more.

Integration: Docker Hub can be integrated with other services, while ACR integrates natively with Azure services and tools.

Scalability: Docker Hub has limited scalability, while ACR can scale to meet the demands of large enterprises.

In general, Docker Hub is a good choice for open-source projects and small-scale applications, while ACR is better suited for enterprise-level applications and DevOps teams that need more security and control over their container images.

I love that ChatGPT just tells me this stuff without having to even open a webpage!

Dan: How do I push an image to Azure Container Registry?

ChatGPT:

To push an image to Azure Container Registry (ACR), you need to follow these steps:

Log in to your Azure account:

az login

Create an ACR instance:

az acr create --name <acrName> --resource-group <resourceGroupName> --sku Basic

Log in to the ACR instance:

az acr login --name <acrName>

Tag the image with the ACR login server name:

docker tag <image_name> <acrLoginServer>/<repository_name>:<tag>

Push the image to ACR:

docker push <acrLoginServer>/<repository_name>:<tag>

Replace the variables in angle brackets with the appropriate values.
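The `<acrLoginServer>` placeholder is, as far as I can tell, the registry name in lowercase followed by `.azurecr.io`; running `az acr show --name <acrName> --query loginServer --output tsv` should confirm it for a real registry. ACR names must be 5 to 50 alphanumeric characters. Here's a Python sketch that builds the full target reference and the tag-then-push sequence from the steps above; the registry and repository names are placeholders.

```python
import re

# ACR registry names: 5-50 alphanumeric characters.
ACR_NAME = re.compile(r"^[a-zA-Z0-9]{5,50}$")


def acr_image_ref(acr_name: str, repository: str, tag: str) -> str:
    """Build <loginServer>/<repository>:<tag> for an Azure Container Registry."""
    if not ACR_NAME.match(acr_name):
        raise ValueError("ACR names must be 5-50 alphanumeric characters")
    login_server = f"{acr_name.lower()}.azurecr.io"
    return f"{login_server}/{repository}:{tag}"


def push_commands(local_image: str, acr_name: str,
                  repository: str, tag: str) -> list[list[str]]:
    """The log in, tag and push steps above, as argument lists."""
    target = acr_image_ref(acr_name, repository, tag)
    return [
        ["az", "acr", "login", "--name", acr_name],
        ["docker", "tag", local_image, target],
        ["docker", "push", target],
    ]
```

Each list could be passed to `subprocess.run(..., check=True)` in turn, assuming the Azure CLI and Docker are both installed and `az login` has already been run.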

Dan: How do I pull an image from Azure Container Registry?

ChatGPT:

To pull an image from Azure Container Registry (ACR), you need to follow these steps:

Log in to your Azure account:

az login

Log in to the ACR instance:

az acr login --name <acrName>

Pull the image from ACR:

docker pull <acrLoginServer>/<repository_name>:<tag>

Replace the variables in angle brackets with the appropriate values.

According to Microsoft Learn, you can also use the docker login command to log in to an ACR, for example: docker login myregistry.azurecr.io

Running multiple containers together

Dan: How do I run multiple Docker containers together?

ChatGPT:

There are several ways to run multiple Docker containers together:

Docker Compose: You can use Docker Compose to define and run multi-container applications. You create a docker-compose.yml file that specifies the services, networks, and volumes for your application, then use the docker-compose up command to start the containers.

Docker Swarm: You can use Docker Swarm to run and manage a swarm of Docker nodes as a single virtual system. You create a swarm, deploy services to the swarm, and manage and scale the services.

Kubernetes: You can use Kubernetes to automate the deployment, scaling, and management of containerized applications. You create a deployment that specifies the desired state of your containers, and Kubernetes manages the containers to meet that state.

Each of these methods provides a different way to run multiple containers together, so you should choose the method that is best suited to your needs. Docker Compose is the simplest and easiest to use, while Kubernetes provides the most advanced features for running containers at scale.

Dan: Show me a Docker Compose example using Azure Container Registry

ChatGPT:

Here's an example of a docker-compose.yml file that runs two services using images stored in Azure Container Registry (ACR):

```yaml
version: '3'
services:
  web:
    image: <acrLoginServer>/<web_image>:<tag>
    ports:
      - "80:80"
  db:
    image: <acrLoginServer>/<db_image>:<tag>
    environment:
      MYSQL_ROOT_PASSWORD: <password>
```

This docker-compose.yml file defines two services, web and db. The web service uses an image stored in ACR and maps port 80 on the host to port 80 in the container. The db service uses an image stored in ACR and sets the MYSQL_ROOT_PASSWORD environment variable.

Before you run the docker-compose up command, you need to log in to the ACR instance using the az acr login command. Then, you can start the containers using the following command in the same directory as the docker-compose.yml file:

docker-compose up

This will start both containers and run them in the foreground. To run the containers in the background, use the -d option:

docker-compose up -d

Dan: What is the difference between Docker Compose and Docker Swarm?

ChatGPT:

Docker Compose and Docker Swarm are both tools for managing multiple Docker containers. However, they have different goals and use cases.

Docker Compose: is a tool for defining and running multi-container Docker applications. It provides a simple way to define the services, networks, and volumes for your application in a docker-compose.yml file, then use the docker-compose up command to start and stop the containers. Docker Compose is easy to use and is well suited for development and testing environments.

Docker Swarm: is a tool for orchestrating and managing a swarm of Docker nodes as a single virtual system. You can use Docker Swarm to deploy services to a swarm of nodes, manage and scale the services, and manage the swarm as a whole. Docker Swarm provides advanced features for running containers in production, such as swarm-level networking, service discovery, and rolling updates.

In summary, Docker Compose is a simple and easy-to-use tool for running multi-container applications, while Docker Swarm provides advanced features for running containers at scale in production environments.

Container security

Dan: Tell me about Docker container security

ChatGPT:

Docker containers are isolated from the host operating system, but it is still important to properly secure them to prevent unauthorized access and data breaches. Here are some steps you can take to improve the security of your Docker containers:

Use official and verified images: Always use official images from a trusted repository like Docker Hub or official repository of the respective application.

Keep your images and host updated: Regularly update your containers and host operating system to fix any security vulnerabilities.

Limit privileges: Use the --user option in the docker run command to run the container with a non-root user with minimal permissions.

Apply least privilege principle: Grant containers the minimum permissions necessary to do their job.

Network security: Be mindful of the network and ports that your containers are exposed to. If possible, use a private network for communication between containers and only expose necessary ports to the outside world.

Logging and monitoring: Keep track of the logs generated by your containers to detect and respond to security incidents.

Use a container security tool: There are several tools available for securing Docker containers, such as Aqua Security, Sysdig, and StackRox, among others.

By following these best practices, you can help improve the security of your Docker containers and prevent security incidents.
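Two of these ideas, pinning versions rather than relying on latest and not running as root, can be checked before an image is even built. Here's a deliberately naive Python sketch that reads a Dockerfile's text and flags both. It's my own illustration rather than a real linter, and it ignores multi-stage builds, line continuations, `--platform` flags and ARG substitution.

```python
def lint_dockerfile(text: str) -> list[str]:
    """Return warnings for unpinned base images and a missing non-root USER."""
    warnings = []
    last_user = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        args = parts[1] if len(parts) > 1 else ""
        if instruction == "FROM" and args.split():
            image = args.split()[0]
            name = image.rsplit("/", 1)[-1]  # ignore any registry host:port
            unpinned = ":" not in name or name.endswith(":latest")
            if image != "scratch" and "@" not in image and unpinned:
                warnings.append(f"base image '{image}' is not pinned to a version")
        elif instruction == "USER":
            last_user = args.strip()
    if last_user is None or last_user.split(":")[0] in ("root", "0"):
        warnings.append("no non-root USER set; the image may run as root "
                        "unless its base image sets a user")
    return warnings
```

Run against the Dockerfile ChatGPT gave me earlier, both warnings would fire: it uses `nginx:latest` and never sets a USER.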

Dan: How do I scan a Docker image?

ChatGPT:

You can scan a Docker image for vulnerabilities using a vulnerability scanner. Some popular vulnerability scanners for Docker images are:

Clair by CoreOS

Aqua Security

Synk by Snyk

You can run these scanners on the host or in a separate container and scan the images in your local Docker registry. The scan results will provide a report on known vulnerabilities and recommendations on how to remediate them.

As I've been running a lot of these Docker CLI commands locally, every now and again Docker will prompt me to run a docker scan command against an image. According to the docs, docker scan uses Snyk to scan an image for known vulnerabilities.

You need to log in to Docker Hub before you can run the command.

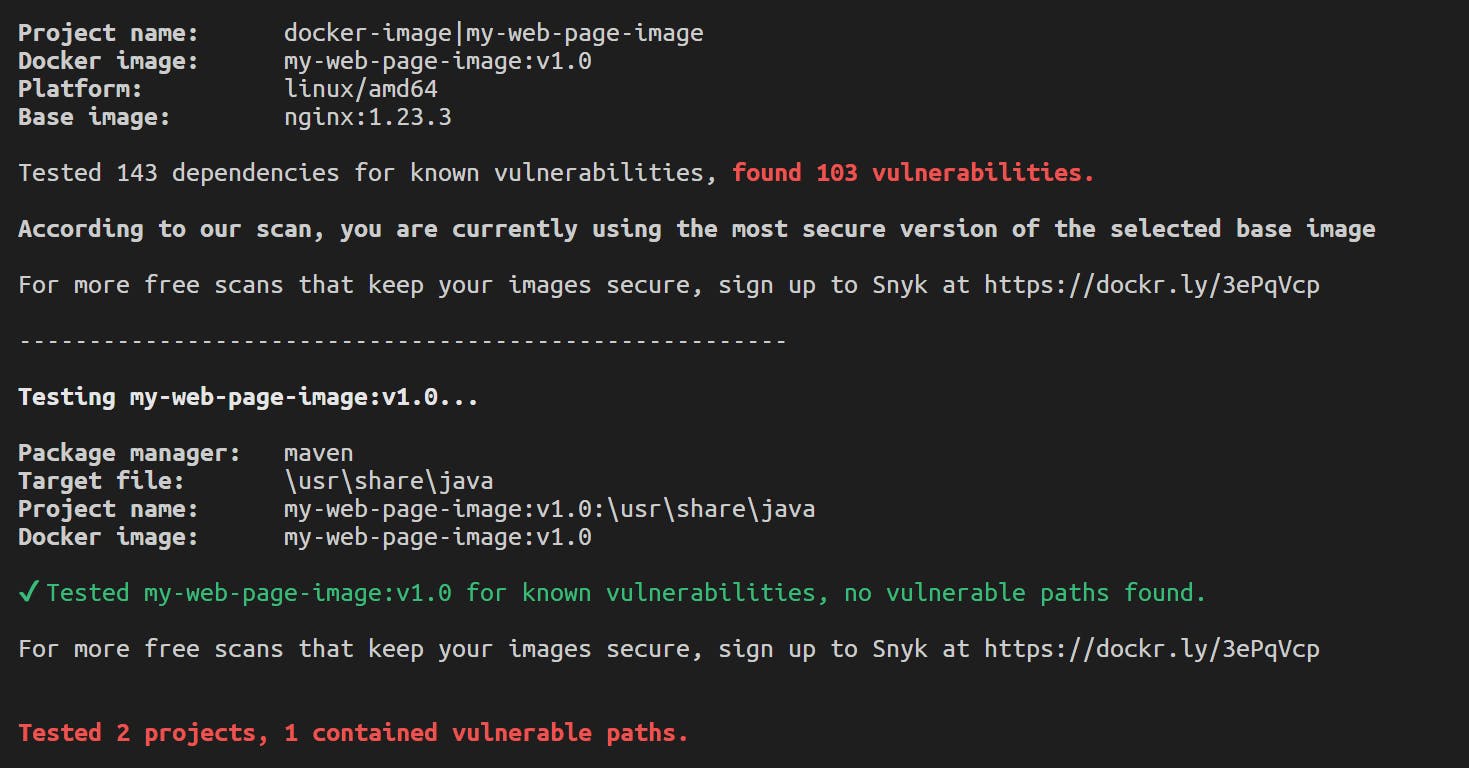

I ran the below against an image I created in an earlier example:

docker scan my-web-page-image:v1.0

The output was incredibly detailed:

So detailed, in fact, that I'm not really sure what to do with this information! I'm presuming the dependency vulnerabilities come from the NGINX parent image, as all I'm doing here is pulling that, copying over a simple HTML file and exposing port 80.

I'm not going to fall down this rabbit hole right now, so as to keep this article focused on its context. This is certainly a topic I need to revisit, though, especially once I progress to running containers in Azure.

My friend Richard Hooper has an article on using Trivy to scan container images with Azure Container Registry (ACR), which is worth comparing and contrasting with Snyk:

Use Trivy and Azure DevOps to scan container images for Vulnerabilities
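For a feel of how that compares, here is a rough, untested sketch of an Azure Pipelines step that runs Trivy from its official aquasec/trivy container image.

It assumes a Linux agent (such as ubuntu-latest) where my-web-page-image:v1.0 was built earlier in the same job. Mounting the Docker socket lets Trivy read that local image.

The flags help with the information overload described above:

- --severity and --ignore-unfixed cut the report down to high and critical issues that have a fix available.
- --exit-code 1 fails the step if any are found.

```yaml
steps:
  - script: |
      # Run Trivy in a throwaway container against the locally built image
      docker run --rm \
        -v /var/run/docker.sock:/var/run/docker.sock \
        aquasec/trivy:latest image \
        --severity HIGH,CRITICAL \
        --ignore-unfixed \
        --exit-code 1 \
        my-web-page-image:v1.0
    displayName: 'Scan image with Trivy'
```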

Are there any Azure native solutions to meet this requirement?

Dan: Can you use Defender for Cloud with Docker images?

ChatGPT:

Microsoft Defender for Cloud can be used to secure Docker images in the following ways:

Scanning images in Azure Container Registry: Defender for Cloud can scan images stored in Azure Container Registry for vulnerabilities and threats.

Securing Kubernetes clusters: Defender for Cloud can also be used to secure Kubernetes clusters, where the Docker images can run as containers in the cluster.

Integrating with CI/CD pipelines: Defender for Cloud can be integrated into the CI/CD pipeline to scan images for vulnerabilities and threats before they are deployed.

By using Defender for Cloud, you can enhance the security of your Docker images and applications running in the cloud.

Well... Microsoft Defender for container registries has actually been deprecated and has since been replaced by Microsoft Defender for Containers.

CI/CD

Defining a Docker image within a Dockerfile, or linking multiple containers together in a Docker Compose file, allows us to save these artifacts as code. These files can be placed into source control, such as GitHub or Azure Repos.

Having these files in source control then allows us to trigger pipelines based on changes, for example, building a new version of an image every time a code change is made to the file.
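A GitHub Actions trigger can be narrowed with a paths filter. That way a new image is only built when the Dockerfile, the Compose file or the application code copied into the image actually changes. The file and folder names below are hypothetical:

```yaml
on:
  push:
    branches:
      - main
    paths:
      - 'Dockerfile'
      - 'docker-compose.yml'
      - 'src/**'          # hypothetical folder copied into the image
```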

Let's see what ChatGPT can tell us about using pipelines to build an image:

Dan: Show me how to build a Docker image in GitHub Actions

ChatGPT:

Here is a sample workflow to build a Docker image in GitHub Actions:

    name: Build Docker Image

    on:
      push:
        branches:
          - main

    env:
      DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
      DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
      IMAGE_NAME: myimage

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v2
          - name: Build Docker Image
            uses: docker/build-push-action@v2
            with:
              context: .
              push: true
              tags: ${{ env.IMAGE_NAME }}
              username: ${{ env.DOCKER_USERNAME }}
              password: ${{ env.DOCKER_PASSWORD }}

This workflow listens to pushes to the main branch and triggers the build job. The job checks out the code, builds the Docker image, and pushes it to a Docker registry, such as Docker Hub, with the provided username and password. The name of the image is defined in the IMAGE_NAME environment variable. The Docker username and password are stored as secrets in the GitHub repository.

The only thing I'd add to this is the importance of image tagging, and of incrementing tags to denote new releases. There appear to be multiple ways to do this, however I imagine this is a project-specific requirement.
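Docker's GitHub Actions documentation describes one of those ways: letting docker/metadata-action generate tags from Git events.

The sketch below assumes a hypothetical myaccount/myimage Docker Hub repository. It reuses the DOCKER_USERNAME and DOCKER_PASSWORD secrets from ChatGPT's example. Login happens in a separate docker/login-action step, because docker/build-push-action has no username or password inputs of its own.

```yaml
name: Build and push Docker image

on:
  push:
    branches:
      - main
    tags:
      - 'v*'                          # release tags such as v1.2.0

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      - name: Generate image tags
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: myaccount/myimage   # hypothetical Docker Hub repository
          tags: |
            type=semver,pattern={{version}}
            type=sha

      - name: Build and push
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
```

With this setup, pushing a Git tag such as v1.2.0 should produce a 1.2.0 image tag. Every build also gets a tag derived from the commit SHA, so each image can be traced back to its source.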

Dan: Show me how to build a Docker image in Azure DevOps Pipelines

ChatGPT:

Here is a sample YAML pipeline to build a Docker image in Azure DevOps Pipelines:

    pool:
      vmImage: 'ubuntu-latest'

    steps:
      - task: Docker@2
        displayName: 'Build Docker Image'
        inputs:
          command: 'build'
          arguments: '--tag myimage:$(Build.BuildId) .'
      - task: Docker@2
        displayName: 'Push Docker Image'
        inputs:
          command: 'push'
          arguments: 'myimage:$(Build.BuildId)'
          containerRegistry: 'dockerhub'
          tags: |
            $(Build.BuildId)

This pipeline runs on an Ubuntu virtual machine and has two tasks: building the Docker image and pushing it to a Docker registry, such as Docker Hub. The Docker image is built with a tag that includes the build ID, using . as the build context. The Docker registry connection is defined in the containerRegistry input, and the image tags are defined in the tags input. You can replace the dockerhub registry with your own registry connection.

Note that this uses the BuildId as an image tag. Interesting!
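The Docker@2 task can also combine both steps using its buildAndPush command, with the repository and tags inputs taking the place of a --tag argument.

The sketch below assumes a Docker registry service connection named dockerhub (as in ChatGPT's example) and a hypothetical myaccount/myimage repository. It pushes both a unique build ID tag and latest:

```yaml
pool:
  vmImage: 'ubuntu-latest'

steps:
  - task: Docker@2
    displayName: 'Build and push image'
    inputs:
      command: 'buildAndPush'
      containerRegistry: 'dockerhub'    # Docker registry service connection name
      repository: 'myaccount/myimage'   # hypothetical Docker Hub repository
      Dockerfile: '**/Dockerfile'
      tags: |
        $(Build.BuildId)
        latest
```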

Let's push the boat out and see what else ChatGPT can give us here:

Dan: Show me how to build a Docker image in Azure DevOps Pipelines using ACR with vulnerability scanning using Microsoft Defender for Containers in YAML

I had to be very specific with the above question, as I had a variety of results, some of which showed deprecated info.

ChatGPT:

Here is an example of a YAML pipeline for building a Docker image in Azure DevOps Pipelines using ACR with vulnerability scanning using Microsoft Defender for Containers:

    trigger:
      - master

    pool:
      vmImage: 'ubuntu-latest'

    steps:
      - task: Docker@2
        displayName: 'Build Docker Image'
        inputs:
          command: 'build'
          tags: $(ACRName).azurecr.io/image:$(Build.BuildId)
      - task: AzureCLI@2
        displayName: 'Push Docker Image to ACR'
        inputs:
          azureSubscription: '<Azure Subscription Name>'
          scriptType: 'ps'
          scriptLocation: 'inlineScript'
          inlineScript: |
            az acr login --name $(ACRName)
            docker push $(ACRName).azurecr.io/image:$(Build.BuildId)
      - task: DefenderForContainers@0
        displayName: 'Scan Image for Vulnerabilities'
        inputs:
          azureSubscription: '<Azure Subscription Name>'
          containerRegistry: '$(ACRName)'
          image: 'image:$(Build.BuildId)'
          severityThreshold: 'high'

Note: Replace <Azure Subscription Name> with the name of your Azure subscription, and replace $(ACRName) with the name of your Azure Container Registry. You may also adjust the severity threshold for the vulnerability scan as needed.

You can then run the command az acr vulnerability-report in order to export the scan output.

Windows vs Linux

Everything I've done thus far has been using Docker Desktop on Windows, but with Linux-based images. I've seen in the Docker Desktop settings that I can switch from Linux to Windows containers (below), but how does this work elsewhere?

Dan: In Docker, how do you switch between Linux and Windows based images?

ChatGPT:

Docker runs on a host operating system, and the containers it runs can be based on either Linux or Windows operating systems. To switch between Linux and Windows based images in Docker, you need to have a host operating system that supports the desired container operating system.

For example, if you have a Windows-based host, you can run Linux-based containers by using a virtualization technology like Hyper-V to create a Linux virtual machine. The Docker engine can then be installed on this virtual machine and used to run Linux-based containers.

Conversely, if you have a Linux-based host, you can run Windows-based containers by installing the Docker engine on the host and using it to run Windows-based containers. However, to run Windows-based containers, the host operating system must be running Windows Server 2016 or later, and the Docker engine must be running the Windows Server version of Docker.

In conclusion, you can switch between Linux and Windows-based images in Docker by having a host operating system that supports the desired container operating system and installing the appropriate version of the Docker engine.
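Containers share the host's kernel, which explains the split:

- Windows containers need a Windows host, such as Windows Server, or Docker Desktop on Windows switched to Windows containers.
- Linux containers need a Linux kernel, which Docker Desktop on Windows provides through WSL 2 or a Hyper-V virtual machine.

A CI pipeline makes this easy to see, because each image type has to be built on a matching runner. Here is a sketch in GitHub Actions:

- Dockerfile.windows and the myimage tags are hypothetical.
- The Windows Dockerfile is assumed to use a base image matching the runner's OS version, such as mcr.microsoft.com/windows/servercore:ltsc2022.

```yaml
name: Build Linux and Windows images

on: push

jobs:
  linux:
    runs-on: ubuntu-latest            # Linux host: builds Linux images
    steps:
      - uses: actions/checkout@v4
      - name: Build Linux image
        run: docker build -t myimage:linux -f Dockerfile .

  windows:
    runs-on: windows-2022             # Windows host: required for Windows images
    steps:
      - uses: actions/checkout@v4
      - name: Build Windows image
        run: docker build -t myimage:windows -f Dockerfile.windows .
```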

Docker in Azure

Dan: How can you use Docker in Azure?

ChatGPT:

There are several ways to use Docker in Microsoft Azure:

Azure Container Instances (ACI): Azure Container Instances is a fully managed service that allows you to run Docker containers in Azure without having to manage any infrastructure. You simply upload your Docker images to ACI, and it takes care of the rest, including provisioning, deploying, and scaling your containers.

Azure Kubernetes Service (AKS): Azure Kubernetes Service is a managed Kubernetes service that allows you to run and manage Docker containers at scale. With AKS, you can deploy, manage, and scale your Docker containers using Kubernetes, the popular open-source orchestration platform.

Azure App Service: Azure App Service is a fully managed platform for building, deploying, and scaling web applications and APIs. You can deploy your Docker images to App Service using a Container option, which provides a fully managed experience for running containers in production.

Azure DevOps: Azure DevOps is a suite of tools for planning, developing, testing, and delivering software. You can use Azure DevOps to automate the build and deployment of your Docker images to any of the above platforms or any other infrastructure that supports Docker.

In conclusion, you can use Docker in Azure in various ways, including Azure Container Instances, Azure Kubernetes Service, Azure App Service, and Azure DevOps.

An interesting answer. It's missed off Azure Container Apps, Azure Container Registry, and running a Docker host on a virtual machine.
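As a taste of the first option on that list, ACI can create a container group from a YAML file passed to az container create --resource-group <resource-group> --file aci.yaml.

Here is a minimal sketch. It assumes the my-web-page-image:v1.0 image from earlier has been pushed to a hypothetical registry called myregistry.azurecr.io. The region, names and credential placeholders are all assumptions:

```yaml
apiVersion: 2019-12-01
location: uksouth
name: my-web-page                     # hypothetical container group name
properties:
  containers:
    - name: my-web-page
      properties:
        image: myregistry.azurecr.io/my-web-page-image:v1.0
        resources:
          requests:
            cpu: 1
            memoryInGB: 1.5
        ports:
          - port: 80
  osType: Linux
  ipAddress:
    type: Public                      # give the container group a public IP address
    ports:
      - protocol: tcp
        port: 80
  imageRegistryCredentials:           # needed to pull from a private registry
    - server: myregistry.azurecr.io
      username: '<acr-username>'
      password: '<acr-password>'
type: Microsoft.ContainerInstance/containerGroups
```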

Conclusion

Dan: Write me a summary for a blog article about using ChatGPT to learn Docker

ChatGPT:

In this blog article, we discuss the benefits of using OpenAI's ChatGPT language model to learn Docker. ChatGPT is a highly capable language model that can be used to answer questions and provide information on a wide range of topics, including Docker. By using ChatGPT to learn Docker, users can receive quick and concise answers to their questions, which can help them to quickly get up to speed with this powerful containerization platform. Additionally, ChatGPT can provide tips and guidance on how to perform specific tasks in Docker, such as building images, deploying containers, and managing containers in production. With ChatGPT, users can also receive real-time feedback on their questions, which can help them to better understand the concepts and techniques involved in using Docker. Overall, using ChatGPT to learn Docker is an effective and efficient way to gain a deep understanding of this platform, and can help users to quickly become proficient in using it.

I mean, ChatGPT has summed up this article better than I could have at this point!

Using ChatGPT in this specific context has saved me a lot of time by removing the need to use a search engine, parse the results by opening and skimming multiple web pages, then writing up my findings.

Yes, using ChatGPT to essentially co-author a blog article is absolutely cheating! To be clear, I'm not trying to pass off the ChatGPT output as my own work, and if you recall the article introduction, I'm using ChatGPT as a productivity tool. It has certainly boosted my productivity!

Dan: Say goodbye to all my readers!

ChatGPT:

Goodbye readers! Thank you for taking the time to read this article. If you have any more questions or would like to learn more about using ChatGPT to learn Docker, feel free to reach out. Have a great day!

Amazing!